Security

Introduction

We are becoming more and more digital. We are digitizing not only our businesses but also our private lives: we contact people online, send messages, share moments with friends, do business, and organize our daily routines. As a result, more and more critical data is being digitized and processed, both privately and commercially. Cybersecurity is therefore becoming increasingly important, as its goal is to safeguard users by ensuring the availability, integrity and confidentiality of their data. Today's digitally delivered solutions increasingly run on web resources, and because web applications are so widely used, our modern lives are closely tied to their security.

This chapter analyzes the current state of security on the web and gives an overview of the methods that the web community uses (and fails to use) to protect its environment. More specifically, we analyze different metrics on Transport Layer Security (TLS), which underpins HTTPS, such as overall adoption, protocol versions, and cipher suites. We also give an overview of the techniques used to protect cookies. This is followed by a comprehensive analysis of content inclusion and of methods for thwarting attacks (e.g., specific security headers). We also look at how security mechanisms are adopted (e.g., by country or specific technology). We then discuss malpractices on the web, such as cryptojacking, and finally look at the usage of security.txt URLs.

We crawl the analyzed pages in both desktop and mobile mode, but for a lot of the data they give similar results, so unless otherwise noted, stats presented in this chapter refer to the set of mobile pages. For more information on how the data has been collected, refer to the Methodology page.

Transport security

Following the recent trend, we again see continuous growth in the number of websites adopting HTTPS this year. Transport Layer Security is important for secure browsing because it ensures that the resources served to you and the data you send to the website are not tampered with in transit. Almost all major browsers now come with an HTTPS-only setting, and browsers show increasingly prominent warnings when a website uses HTTP instead of HTTPS, pushing broader adoption forward.

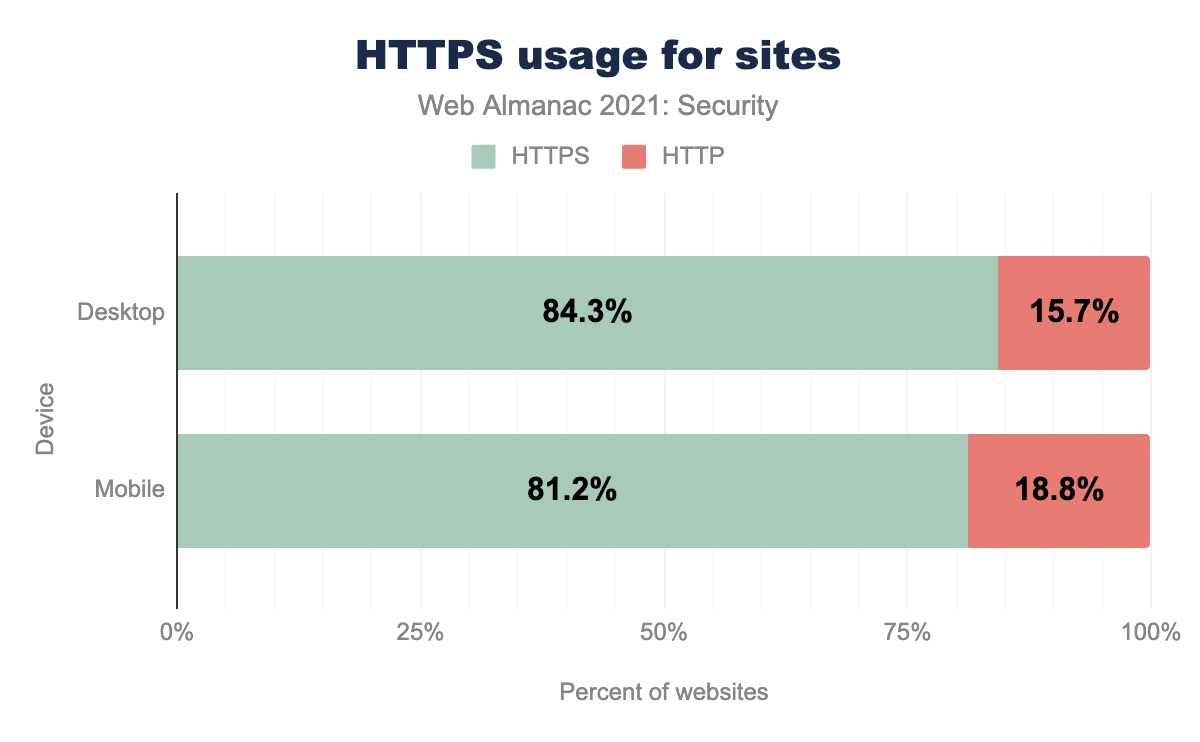

Currently, we see that 91.9% of total requests for websites on desktop and 91.1% for mobile are being served using HTTPS. We see an increasing number of certificates being issued every day thanks to non-profit certificate authorities like Let’s Encrypt.

Currently, 84.3% of website home pages on desktop and 81.2% on mobile are served over HTTPS, so there is still a gap between websites using HTTPS and requests using HTTPS. This is because the impressive share of HTTPS requests is largely driven by third-party services such as fonts, analytics, and CDNs, rather than by the initial web page itself.

We do see a continuous improvement in sites using HTTPS (approximately 7-8% increase since last year), but soon a lot of unmaintained websites might start seeing warnings once browsers start adopting HTTPS-only mode by default.

Protocol versions

Transport Layer Security (TLS) is the protocol that helps make HTTP requests secure and private. With time, new vulnerabilities are discovered and fixed in TLS. Hence, it’s not just important to serve a website over HTTPS but also to ensure that modern, up-to-date TLS configuration is being used to avoid such vulnerabilities.

As part of the effort to improve security and reliability by adopting modern versions, TLS 1.0 and 1.1 were deprecated by the Internet Engineering Task Force (IETF) as of March 25, 2021. All upstream browsers have also either removed support for TLS 1.0 and 1.1 completely or deprecated them. For example, Firefox has deprecated TLS 1.0 and 1.1 but has not removed them completely, because during the pandemic users might need to access government websites that often still run on TLS 1.0. Users can still change security.tls.version.min in the browser configuration to set the lowest TLS version the browser will allow.
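TLS libraries expose a knob comparable to Firefox's security.tls.version.min. As a minimal sketch, assuming a Python client built on the standard ssl module (the make_client_context helper is hypothetical), refusing anything older than TLS 1.2 could look like this. Recent Python versions may already default to this minimum, so the explicit setting mainly documents intent.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses TLS 1.0 and 1.1."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Similar in spirit to Firefox's security.tls.version.min preference:
    # servers that only offer TLS 1.0/1.1 will fail the handshake.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = make_client_context()
print(ctx.minimum_version.name)  # TLSv1_2
```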

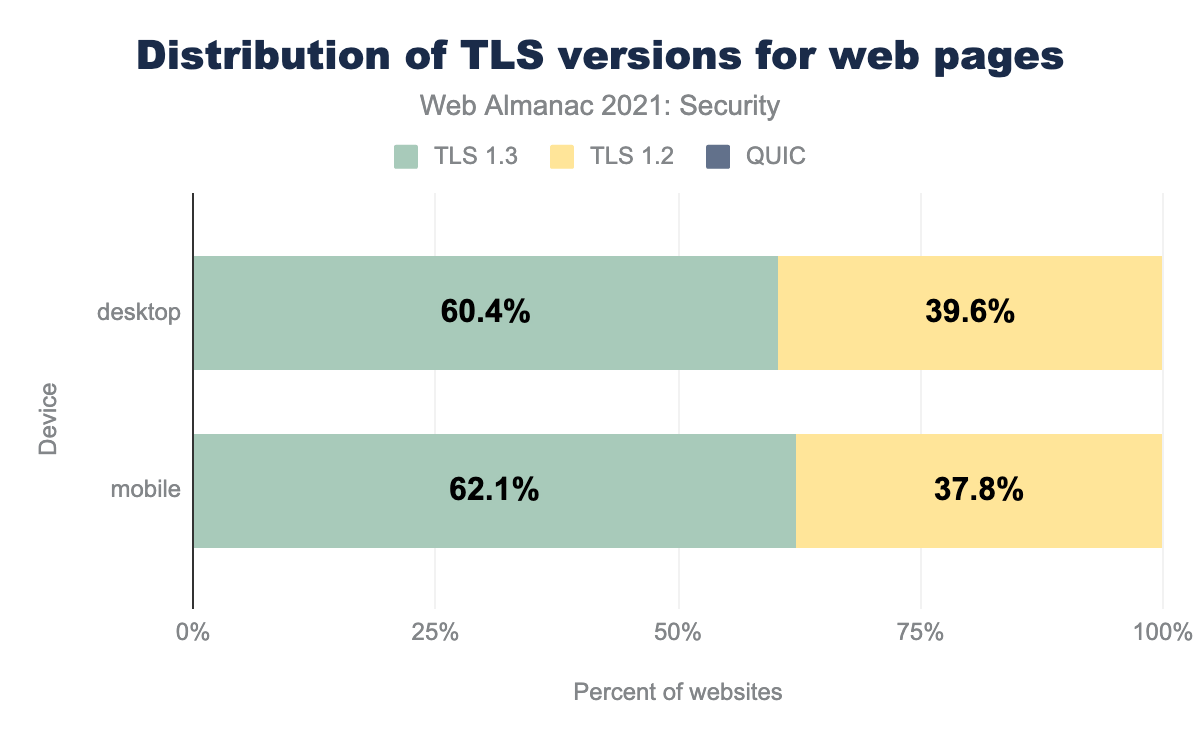

60.4% of pages on desktop and 62.1% of pages on mobile now use TLSv1.3, making it the majority protocol version ahead of TLSv1.2. The share of pages using TLSv1.3 has grown by roughly 17 percentage points since last year, when it was 43.2% and 45.4% respectively.

Cipher suites

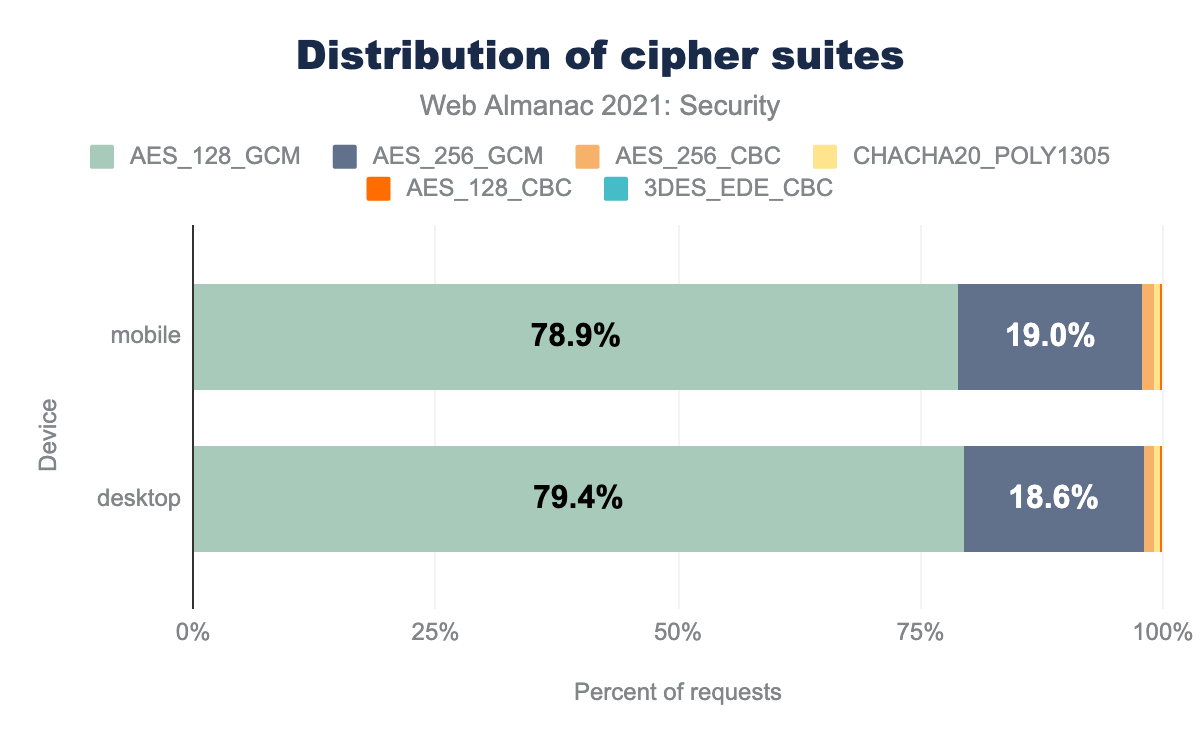

Cipher suites are sets of algorithms used with TLS to establish secure connections. Modern Galois/Counter Mode (GCM) ciphers are considered much more secure than the older Cipher Block Chaining (CBC) mode ciphers, which have been shown to be vulnerable to padding oracle attacks. While TLSv1.2 supports both newer and older cipher suites, TLSv1.3 does not support any of the older ones. This is one reason TLSv1.3 is the more secure option for connections.

Almost all modern cipher suites use a key exchange that provides forward secrecy: if the server's keys are compromised, previously recorded traffic still cannot be decrypted with them. 96.6% on desktop and 96.8% on mobile use forward secrecy. TLSv1.3 makes forward secrecy compulsory, whereas it is optional in TLSv1.2, which is yet another reason TLSv1.3 is more secure.

Apart from the cipher mode, the other consideration is the key size of the authenticated encryption algorithm. A larger key takes much longer to brute-force, while the extra computation for encrypting and decrypting the connection has little to no perceptible impact on site performance.

The cipher suites in use break down as follows:

| Cipher suite | Desktop | Mobile |
|---|---|---|
| AES_128_GCM | 79.4% | 78.9% |
| AES_256_GCM | 18.6% | 19.0% |
| AES_256_CBC | 1.1% | 1.2% |
| CHACHA20_POLY1305 | 0.6% | 0.7% |
| AES_128_CBC | 0.3% | 0.2% |
| 3DES_EDE_CBC | ~0% | ~0% |

AES_128_GCM is still by far the most widely used cipher suite, with 79.4% usage on desktop and 78.9% on mobile. AES_128_GCM means GCM cipher mode with the Advanced Encryption Standard (AES) using a 128-bit key for encryption and decryption. A 128-bit key is still considered secure, but 256-bit keys are slowly becoming the industry standard because they resist brute-force attacks for longer.
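To make the naming convention concrete, here is a small Python sketch that classifies IANA-style cipher suite names by cipher mode, key size, and forward secrecy. The describe helper and CipherInfo type are hypothetical, not part of any library. The sketch assumes the TLS_<key exchange>_WITH_<cipher>_<MAC> pattern for TLS 1.2 and TLS_<cipher>_<hash> for TLS 1.3, and it deliberately rejects modes it does not cover, such as CCM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CipherInfo:
    bulk_cipher: str        # e.g. "AES_128_GCM"
    key_bits: int           # nominal key size
    aead: bool              # GCM / ChaCha20-Poly1305 rather than CBC
    forward_secrecy: bool   # ephemeral (EC)DHE key exchange


def describe(suite: str) -> CipherInfo:
    """Classify an IANA-style TLS cipher suite name (simplified sketch)."""
    if not suite.startswith("TLS_"):
        raise ValueError(f"not an IANA cipher suite name: {suite}")
    body = suite[len("TLS_"):]
    if "_WITH_" in body:
        # TLS 1.2 and earlier: TLS_<key exchange>_WITH_<cipher>_<MAC>
        key_exchange, cipher_and_mac = body.split("_WITH_", 1)
        forward_secrecy = key_exchange.startswith(("ECDHE_", "DHE_"))
    else:
        # TLS 1.3: TLS_<cipher>_<hash>; the key exchange is always ephemeral.
        cipher_and_mac = body
        forward_secrecy = True
    bulk = cipher_and_mac.rsplit("_", 1)[0]  # drop the trailing MAC/hash name

    if bulk.endswith("_GCM") or bulk == "CHACHA20_POLY1305":
        aead = True
    elif bulk.endswith("_CBC"):
        aead = False
    else:
        raise ValueError(f"mode not covered by this sketch: {bulk}")

    if bulk.startswith("AES_"):
        key_bits = int(bulk.split("_")[1])
    elif bulk.startswith("CHACHA20"):
        key_bits = 256
    elif bulk.startswith("3DES"):
        key_bits = 168  # nominal; effective strength is only about 112 bits
    else:
        raise ValueError(f"cipher not covered by this sketch: {bulk}")
    return CipherInfo(bulk, key_bits, aead, forward_secrecy)
```

For example, describe("TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256") reports a 128-bit AEAD cipher with forward secrecy, while describe("TLS_RSA_WITH_AES_256_CBC_SHA") reports a CBC cipher without it.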

Certificate Authorities

A Certificate Authority (CA) is a company or organization that issues digital certificates, which help validate the ownership and identity of entities on the web, such as websites. A CA is needed to issue a TLS certificate that browsers recognize, so that the website can be served over HTTPS. As in the previous year, we look at the CAs used by the websites themselves rather than by third-party services and resources.

| Issuer | Algorithm | Desktop | Mobile |
|---|---|---|---|
| R3 | RSA | 46.9% | 49.2% |
| Cloudflare Inc ECC CA-3 | ECDSA | 11.7% | 11.5% |
| Sectigo RSA Domain Validation Secure Server CA | RSA | 8.3% | 8.2% |
| cPanel, Inc. Certification Authority | RSA | 5.0% | 5.5% |
| Go Daddy Secure Certificate Authority - G2 | RSA | 3.6% | 3.0% |
| Amazon | RSA | 3.4% | 3.0% |
| Encryption Everywhere DV TLS CA - G1 | RSA | 1.3% | 1.6% |
| AlphaSSL CA - SHA256 - G2 | RSA | 1.2% | 1.2% |
| RapidSSL TLS DV RSA Mixed SHA256 2020 CA-1 | RSA | 1.2% | 1.1% |
| DigiCert SHA2 Secure Server CA | RSA | 1.1% | 0.9% |

Let’s Encrypt has changed its subject common name from “Let’s Encrypt Authority X3” to just “R3” to save bytes in new certificates, so certificates issued by R3 come from Let’s Encrypt. As in previous years, Let’s Encrypt leads the chart, with 46.9% of desktop sites and 49.2% of mobile sites using its certificates, up 2-3% from last year. Its free, automated certificate issuance has played a game-changing role in making it easier for everyone to serve their websites over HTTPS.

Cloudflare remains in second position with its similarly free certificates for its customers. Cloudflare's CDN also increases the usage of Elliptic Curve Cryptography (ECC) certificates, which are smaller and more efficient than RSA certificates but are often difficult to deploy, because non-ECC certificates must still be served to older clients. Using a CDN like Cloudflare takes care of that complexity for you. All the latest browsers are compatible with ECC certificates, though some browsers, such as Chrome, depend on the operating system for this. So, if someone uses Chrome on an old OS like Windows XP, they need to fall back to non-ECC certificates.

HTTP Strict Transport Security

HTTP Strict Transport Security (HSTS) is a response header that tells the browser that it should always use secure HTTPS connections to communicate with the website.

The Strict-Transport-Security header makes the browser convert an http:// URL to an https:// URL before making a request to that site. 22.2% of mobile responses and 23.9% of desktop responses have an HSTS header.

| HSTS directive | Desktop | Mobile |
|---|---|---|
| Valid max-age | 92.7% | 93.4% |
| includeSubDomains | 34.5% | 33.3% |
| preload | 17.6% | 18.0% |

Of the sites with an HSTS header, 92.7% on desktop and 93.4% on mobile have a valid max-age (that is, a non-zero, non-empty value), which sets for how many seconds the browser should only visit the website over HTTPS.

33.3% of responses on mobile and 34.5% on desktop include includeSubDomains in their HSTS header. The preload directive is less common, because it is not part of the HSTS specification, and preloading requires a max-age of at least 31,536,000 seconds (1 year) as well as the includeSubDomains directive.
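The directives and thresholds above can be checked mechanically. The following Python sketch is illustrative only: parse_hsts, has_valid_max_age, max_age_days and meets_preload_header_rules are hypothetical helpers that cover just the header-level rules described in this section (the preload list has further, non-header requirements), and the parser is deliberately lenient rather than a full RFC 6797 implementation.

```python
import re
from dataclasses import dataclass
from typing import Optional

PRELOAD_MIN_MAX_AGE = 31_536_000  # one year, as stated above
SECONDS_PER_DAY = 86_400


@dataclass(frozen=True)
class Hsts:
    max_age: Optional[int]
    include_subdomains: bool
    preload: bool


def parse_hsts(header: str) -> Hsts:
    """Parse a Strict-Transport-Security value (simplified sketch)."""
    max_age: Optional[int] = None
    include_subdomains = preload = False
    for raw in header.split(";"):
        name, _, value = raw.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        value = value.strip().strip('"')
        if name == "max-age" and re.fullmatch(r"[0-9]+", value):
            max_age = int(value)
        elif name == "includesubdomains":
            include_subdomains = True
        elif name == "preload":
            preload = True
    return Hsts(max_age, include_subdomains, preload)


def has_valid_max_age(h: Hsts) -> bool:
    """'Valid' as used in this chapter: present and non-zero."""
    return h.max_age is not None and h.max_age > 0


def max_age_days(h: Hsts) -> Optional[int]:
    return None if h.max_age is None else h.max_age // SECONDS_PER_DAY


def meets_preload_header_rules(h: Hsts) -> bool:
    """Header-level checks only; other preload requirements are not covered."""
    return (h.max_age is not None and h.max_age >= PRELOAD_MIN_MAX_AGE
            and h.include_subdomains and h.preload)
```

With this sketch, "max-age=63072000; includeSubDomains; preload" (the two-year value recommended by hstspreload.org) passes all checks and corresponds to 730 days.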

| Percentile | Desktop | Mobile |
|---|---|---|
| 10 | 30 | 30 |
| 25 | 182 | 182 |
| 50 | 365 | 365 |
| 75 | 365 | 365 |
| 90 | 730 | 730 |

HSTS max-age values for all requests (in days).

The median value for max-age attribute in HSTS headers over all requests is 365 days in both mobile and desktop. https://hstspreload.org/ recommends a max-age of 2 years once the HSTS header is set up properly and verified to not cause any issues.

Cookies

An HTTP cookie is a small piece of information about the user accessing the website that the server sends to the web browser. Browsers store this information and send it back with subsequent requests to the server. Cookies help in session management to maintain state information of the user, such as if the user is currently logged in.

Without properly securing cookies, an attacker can hijack a session and send unwanted changes to the server by impersonating the user. It can also lead to Cross-Site Request Forgery attacks, whereby the user’s browser inadvertently sends a request, including the cookies, unbeknownst to the user.

Several other types of attacks rely on the inclusion of cookies in cross-site requests, such as Cross-Site Script Inclusion (XSSI) and various techniques in the XS-Leaks vulnerability class.

You can ensure that cookies are sent securely and aren’t accessed by unintended parties or scripts by adding certain attributes or prefixes.
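As an illustration of the attributes discussed in the following subsections, here is a minimal Python sketch using the standard library's http.cookies module (the SameSite attribute requires Python 3.8 or later). The cookie name "session" and the helper name are hypothetical.

```python
from http.cookies import SimpleCookie


def session_set_cookie(value: str) -> str:
    """Return a Set-Cookie value for a hardened session cookie (Python 3.8+)."""
    jar = SimpleCookie()
    jar["session"] = value
    morsel = jar["session"]
    morsel["path"] = "/"
    morsel["secure"] = True      # only sent over HTTPS
    morsel["httponly"] = True    # not readable via document.cookie
    morsel["samesite"] = "Lax"   # withheld from most cross-site subrequests
    return morsel.OutputString()


print("Set-Cookie: " + session_set_cookie("abc123"))
```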

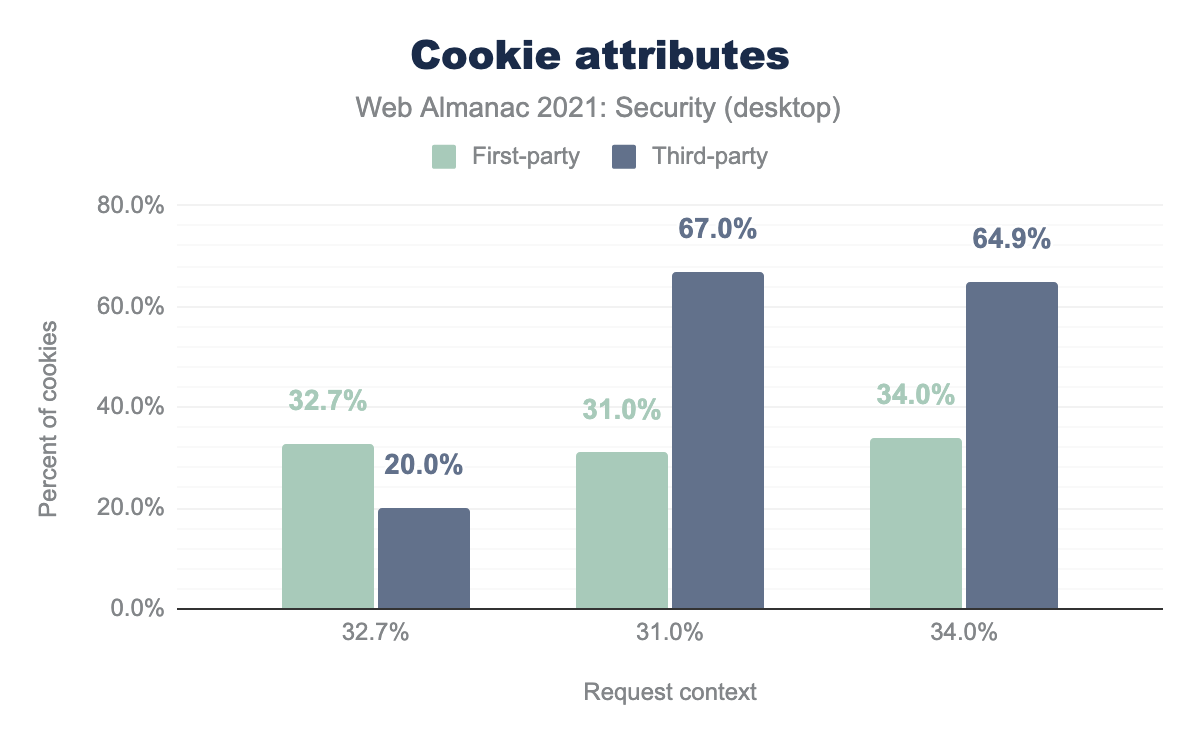

On desktop, HttpOnly is used by 32.7% of first-party cookies, Secure by 31.0%, and SameSite by 34.1%, while for third-party cookies HttpOnly is used by 20.0%, Secure by 67.0%, and SameSite by 64.9%.

Secure

Cookies that have the Secure attribute set are only sent over a secure HTTPS connection, which prevents them from being stolen in a manipulator-in-the-middle attack. Similar to HSTS, this also helps enhance the security provided by TLS. Just over 30% of first-party cookies on both desktop and mobile have the Secure attribute set. However, we do see a significant increase in the percentage of third-party cookies on desktop with the Secure attribute, from 35.2% last year to 67.0% this year. This increase is likely because the Secure attribute is required for SameSite=None cookies, which we discuss below.

HttpOnly

A cookie with the HttpOnly attribute set cannot be accessed through the document.cookie API in JavaScript. Such cookies are only sent to the server, which helps mitigate client-side cross-site scripting (XSS) attacks that try to misuse the cookie. The attribute is used for cookies that are only needed for server-side sessions. Compared to the other cookie attributes, HttpOnly shows a smaller gap between first-party and third-party cookies: it is used by 32.7% and 20.0% of them respectively.

SameSite

The SameSite attribute lets websites tell the browser whether and when to send a cookie with cross-site requests. It is used to prevent cross-site request forgery attacks. SameSite=Strict allows the cookie to be sent only with requests originating from the site that set it. With SameSite=Lax, cookies are not sent with cross-site requests unless the user is navigating to the origin site, for example by following a link. SameSite=None means cookies are sent with both same-site and cross-site requests.
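The three modes can be summarized as a small decision function. This is a simplified Python sketch, not any browser's actual algorithm: cookie_is_sent is a hypothetical helper, it assumes a missing attribute is treated as Lax (as Chrome does), and it ignores details such as Chrome's temporary "Lax + POST" exception.

```python
from typing import Optional

SAFE_METHODS = {"GET", "HEAD", "OPTIONS", "TRACE"}


def cookie_is_sent(samesite: Optional[str], *, cross_site: bool,
                   top_level_navigation: bool, method: str = "GET",
                   secure: bool = True) -> bool:
    """Simplified model of whether a browser attaches a cookie to a request."""
    # Assumption: a missing attribute is treated as Lax, as Chrome does.
    value = (samesite or "Lax").strip().capitalize()
    if not cross_site:
        return True  # same-site requests carry the cookie in every mode
    if value == "Strict":
        return False
    if value == "Lax":
        # Only top-level navigations with a safe method, e.g. following a link.
        return top_level_navigation and method.upper() in SAFE_METHODS
    if value == "None":
        # Chrome rejects SameSite=None cookies that lack the Secure attribute.
        return secure
    raise ValueError(f"unknown SameSite value: {samesite}")
```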

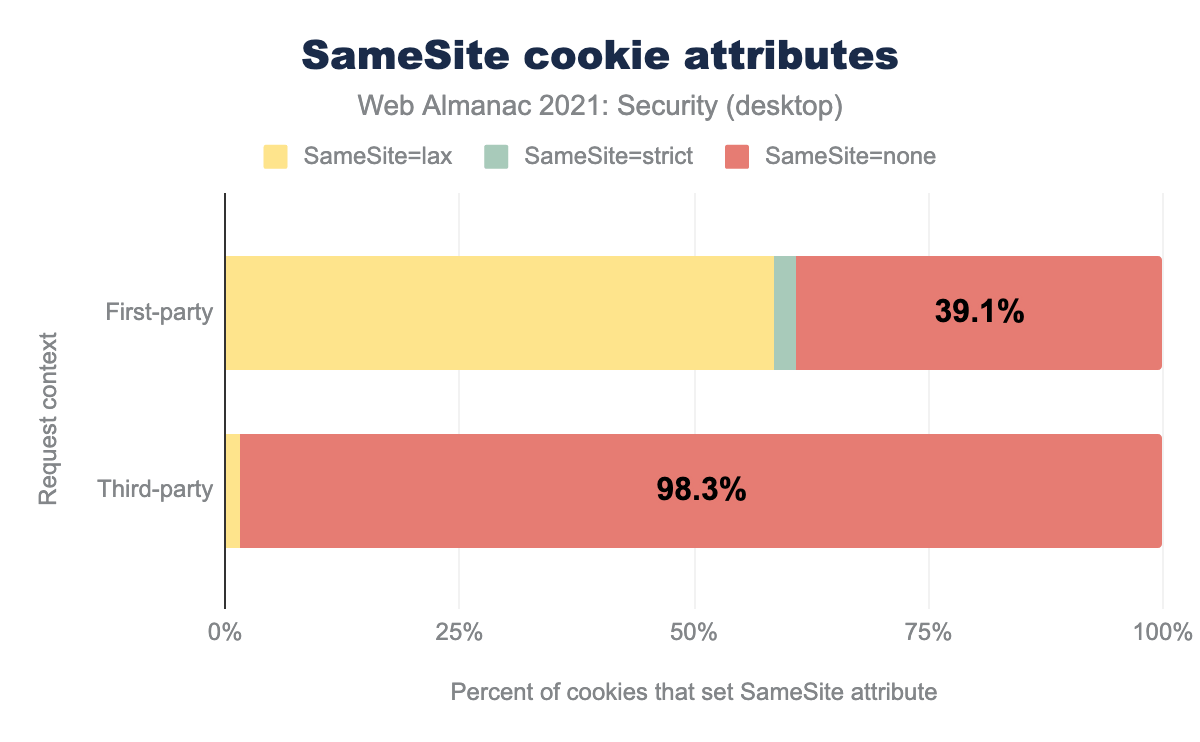

SameSite values among cookies that set the attribute:

| SameSite value | First-party | Third-party |
|---|---|---|
| Lax | 58.5% | 1.5% |
| Strict | 2.5% | 0.1% |
| None | 39.1% | 98.3% |

Of all first-party cookies with a SameSite attribute, 58.5% set it to Lax, while a still daunting 39.1% set it to None, although this number is steadily decreasing. Several major browsers, including Chrome, now default to SameSite=Lax if no SameSite attribute is set. Approximately 65% of all first-party cookies have no SameSite attribute.

Prefixes

The cookie prefixes __Host- and __Secure- help mitigate attacks that overwrite session cookie information, as in session fixation attacks. __Host- domain-locks a cookie: it requires the cookie to have the Secure attribute, to have the Path attribute set to /, to have no Domain attribute, and to be set from a secure origin. __Secure-, on the other hand, only requires the cookie to have the Secure attribute and to be set from a secure origin.

| Type of cookie | __Secure- | __Host- |
|---|---|---|
| First-party | 0.02% | 0.01% |
| Third-party | < 0.01% | 0.03% |

Usage of __Secure- and __Host- cookie prefixes on mobile.

Both prefixes are used by a very small percentage of cookies. Among first-party cookies, __Secure- is more common than __Host-, likely because its requirements are less strict.
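The prefix rules above translate directly into a check. A minimal Python sketch, assuming a hypothetical prefix_requirements_met helper that receives already-parsed cookie attributes and matches the prefixes case-sensitively:

```python
from typing import Optional


def prefix_requirements_met(name: str, *, secure: bool, path: Optional[str],
                            domain: Optional[str], secure_origin: bool) -> bool:
    """Check the __Host- / __Secure- rules described above (case-sensitive)."""
    if name.startswith("__Host-"):
        # Domain-locked: Secure, Path=/, no Domain, set from a secure origin.
        return secure and secure_origin and path == "/" and domain is None
    if name.startswith("__Secure-"):
        return secure and secure_origin
    return True  # unprefixed cookies carry no extra requirements
```

A browser rejects a prefixed cookie that fails these checks, which is why a __Host- cookie cannot be overwritten by a sibling subdomain setting a Domain attribute.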

Cookie age

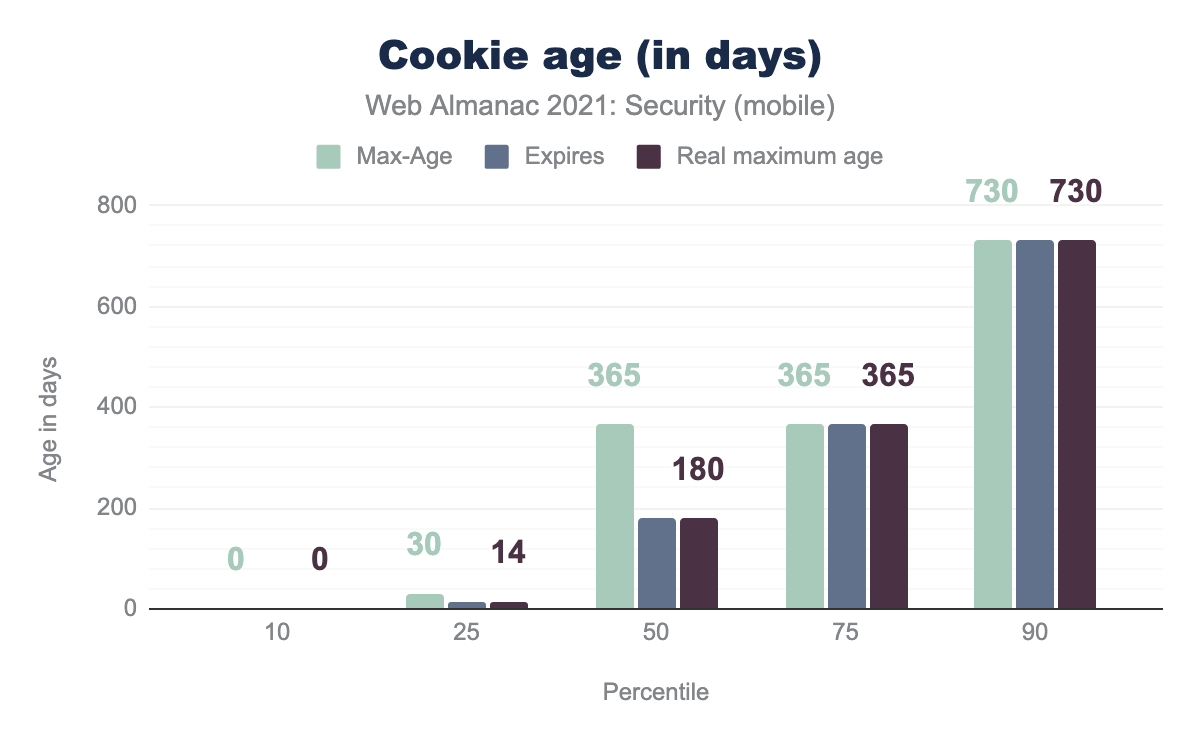

Permanent cookies are deleted at a date specified by the Expires attribute, or after a period of time specified by the Max-Age attribute. If both Expires and Max-Age are set, Max-Age has precedence.

The median Max-Age is 365 days, as about 20.5% of the cookies with Max-Age use the value 31,536,000 (one year). However, 64.2% of first-party cookies set Expires, while only 23.3% set Max-Age. Because Expires is so much more common, the median real maximum age matches the median Expires value (180 days) rather than the median Max-Age.
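The precedence rule described above (Max-Age wins over Expires) can be sketched in Python as follows. effective_expiry is a hypothetical helper; it treats a zero or negative Max-Age as "expire immediately" and returns None for a session cookie that sets neither attribute.

```python
from datetime import datetime, timedelta
from typing import Optional


def effective_expiry(now: datetime, max_age: Optional[int],
                     expires: Optional[datetime]) -> Optional[datetime]:
    """Return when a cookie expires, or None for a session cookie."""
    if max_age is not None:
        # Max-Age takes precedence over Expires; <= 0 expires immediately.
        return now + timedelta(seconds=max(max_age, 0))
    return expires
```

For example, a cookie with Max-Age=31536000 and an Expires date 180 days out lives for 365 days, because Max-Age takes precedence.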

Content inclusion

Most websites include a lot of media and CSS or JavaScript libraries, which more often than not are loaded from external sources such as CDNs or cloud storage services. For the security of both the website and its users, it's important to control which sources of content are trusted. Otherwise, the website is vulnerable to cross-site scripting attacks if untrusted content gets loaded.

Content Security Policy

Content Security Policy (CSP) is the predominant method used to mitigate cross-site scripting and data injection attacks by restricting the origins allowed to load various kinds of content. There are numerous directives a website can use to specify sources for different kinds of content. For instance, script-src specifies the origins or domains from which scripts can be loaded. It also has values that define whether inline scripts and eval() are allowed.
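To see how a policy decomposes into directives and source lists, here is a minimal Python sketch. parse_csp is a hypothetical helper that handles a single policy (no comma-separated lists of policies) and, like browsers, keeps only the first occurrence of a repeated directive.

```python
from typing import Dict, List


def parse_csp(header: str) -> Dict[str, List[str]]:
    """Split a CSP header value into directive -> source list (simplified)."""
    policy: Dict[str, List[str]] = {}
    for chunk in header.split(";"):
        tokens = chunk.split()
        if not tokens:
            continue
        name = tokens[0].lower()
        # Browsers ignore repeated directives; only the first one counts.
        policy.setdefault(name, tokens[1:])
    return policy
```

For instance, "default-src 'self'; script-src 'self' https://cdnjs.cloudflare.com; upgrade-insecure-requests" yields three directives, the last of which has an empty source list.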

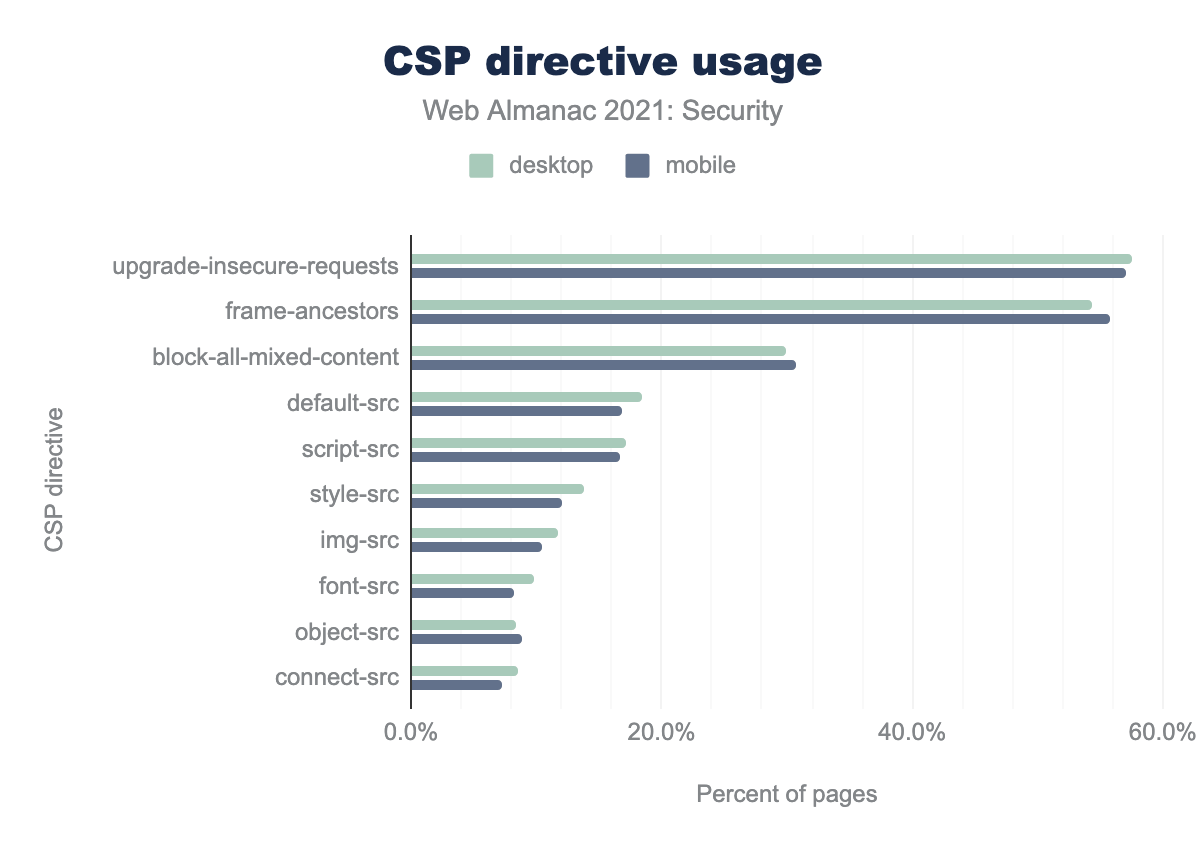

The most common CSP directives are:

| Directive | Desktop | Mobile |
|---|---|---|
| upgrade-insecure-requests | 57.6% | 57.1% |
| frame-ancestors | 54.4% | 55.8% |
| block-all-mixed-content | 29.9% | 30.7% |
| default-src | 18.4% | 16.8% |
| script-src | 17.2% | 16.7% |
| style-src | 13.8% | 12.0% |
| img-src | 11.8% | 10.4% |
| font-src | 9.8% | 8.2% |
| object-src | 8.3% | 8.9% |
| connect-src | 8.5% | 7.2% |

More and more websites are adopting CSP: 9.3% of home pages on mobile use CSP now, compared to 7.2% last year. upgrade-insecure-requests continues to be the most frequently used CSP directive. Its high adoption rate is likely due to the same reasons as last year: it is an easy, low-risk policy that upgrades all HTTP requests to HTTPS and thereby also helps prevent mixed content on the page. frame-ancestors is a close second; it defines the valid parents that may embed a page.

The adoption of policies defining the sources from which content can be loaded continues to be low. Most of these policies are harder to implement, as they can cause breakages, and they require effort to define nonces, hashes or domains for allowing external content.

While a strict CSP is a strong defense against attacks, an incorrectly defined policy can have undesirable effects and prevent valid content from loading. Libraries and APIs that load further content make this even more difficult.

Lighthouse recently started showing severity-rated warnings when such directives are missing from a CSP, encouraging people to adopt a stricter CSP to prevent XSS attacks. We discuss how CSP helps stop XSS attacks in more detail in the Thwarting attacks section of this chapter.

To allow web developers to evaluate the correctness of their CSP policy, there is also a non-enforcing alternative, which can be enabled by defining the policy in the Content-Security-Policy-Report-Only response header. The prevalence of this header is still fairly small: 0.9% in mobile. However, most of the time this header is added in the testing phase and later is replaced by the enforcing CSP, so the low usage is not unexpected.

Sites can also use the report-uri directive to have browsers report CSP violations to a URL that can collect and parse the reports. After a new CSP directive has been added, these reports help to check whether any valid content is accidentally being blocked. The drawback of this powerful feedback mechanism is that CSP reporting can be noisy due to browser extensions and other technology outside the website owner's control.

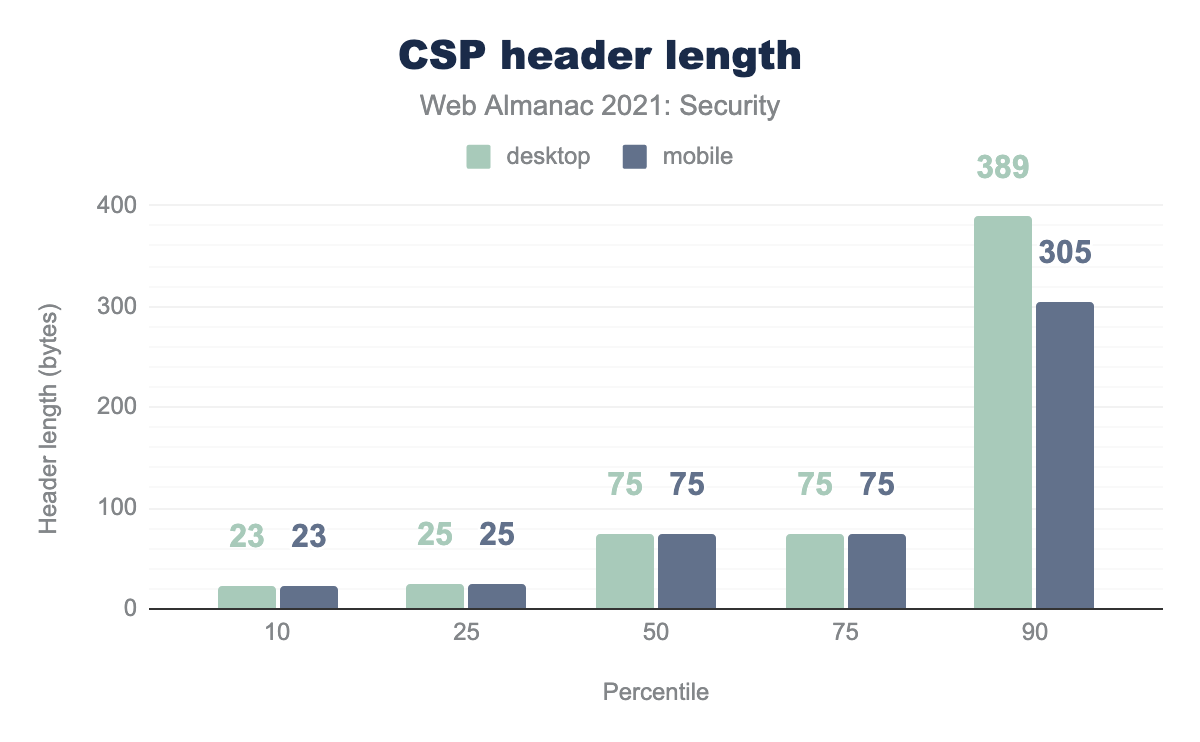

The median length of CSP headers continues to be quite low: 75 bytes. Most websites still use single directives for specific purposes instead of long, strict CSPs. For instance, 24.2% of websites use only the upgrade-insecure-requests directive.

On the other side of the spectrum, the longest CSP header is almost twice as long as last year’s longest CSP header: 43,488 bytes.

| Origin | Desktop | Mobile |
|---|---|---|
| https://www.google-analytics.com | 0.29% | 0.22% |
| https://www.googletagmanager.com | 0.26% | 0.22% |
| https://fonts.googleapis.com | 0.22% | 0.16% |
| https://fonts.gstatic.com | 0.20% | 0.15% |
| https://www.google.com | 0.19% | 0.14% |
| https://www.youtube.com | 0.19% | 0.13% |
| https://connect.facebook.net | 0.16% | 0.11% |
| https://stats.g.doubleclick.net | 0.15% | 0.11% |
| https://www.gstatic.com | 0.14% | 0.11% |
| https://cdnjs.cloudflare.com | 0.12% | 0.10% |

The most common origins used in *-src directives continue to be heavily dominated by Google (fonts, ads, analytics). We also see Cloudflare’s popular library CDN showing up in the 10th position this year.

Subresource Integrity

Many websites load JavaScript and CSS libraries from external CDNs. This has security implications if the CDN is compromised or an attacker finds some other way to replace frequently used libraries. Subresource Integrity (SRI) helps avoid such consequences, though it introduces another risk: if the resource changes for a non-malicious reason, the browser refuses to load it, and the website may not function without it. Where possible, self-hosting instead of loading from a third party is usually the safer option.

Web developers can add the integrity attribute to the <script> and <link> tags used to include JavaScript and CSS code in the website. The integrity attribute contains a hash of the expected content of the resource. The browser then compares the hash of the fetched content with the hash in the integrity attribute and only uses the resource if they match.

```html
<script src="https://code.jquery.com/jquery-3.6.0.min.js"
        integrity="sha256-/xUj+3OJU5yExlq6GSYGSHk7tPXikynS7ogEvDej/m4="
        crossorigin="anonymous"></script>
```

The hash can be computed with three different algorithms: SHA256, SHA384, and SHA512. SHA384 (66.2% in mobile) is currently the most used, followed by SHA256 (31.1% in mobile). Currently, all three hashing algorithms are considered safe to use.
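An integrity value is simply the algorithm name, a dash, and the base64-encoded digest of the file's bytes. The Python sketch below computes such values and mimics the browser check in simplified form: only the strongest algorithm listed is considered, and metadata with no recognized algorithm does not block the resource. The helper names sri_hash and integrity_matches are hypothetical.

```python
import base64
import hashlib

STRENGTH = {"sha256": 1, "sha384": 2, "sha512": 3}


def sri_hash(content: bytes, algorithm: str = "sha384") -> str:
    """Compute an integrity attribute value for the given file contents."""
    if algorithm not in STRENGTH:
        raise ValueError(f"unsupported SRI algorithm: {algorithm}")
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def integrity_matches(content: bytes, integrity: str) -> bool:
    """Simplified browser check: only the strongest listed algorithm counts."""
    candidates = []
    for token in integrity.split():
        token = token.split("?", 1)[0]  # drop any option expression
        algorithm, sep, _ = token.partition("-")
        if sep and algorithm in STRENGTH:
            candidates.append((algorithm, token))
    if not candidates:
        return True  # no usable metadata: the resource is not blocked
    best = max(STRENGTH[alg] for alg, _ in candidates)
    return any(sri_hash(content, alg) == token
               for alg, token in candidates if STRENGTH[alg] == best)
```

Running sri_hash over the exact bytes served by the CDN gives the value to paste into the integrity attribute; any change to those bytes produces a different hash.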


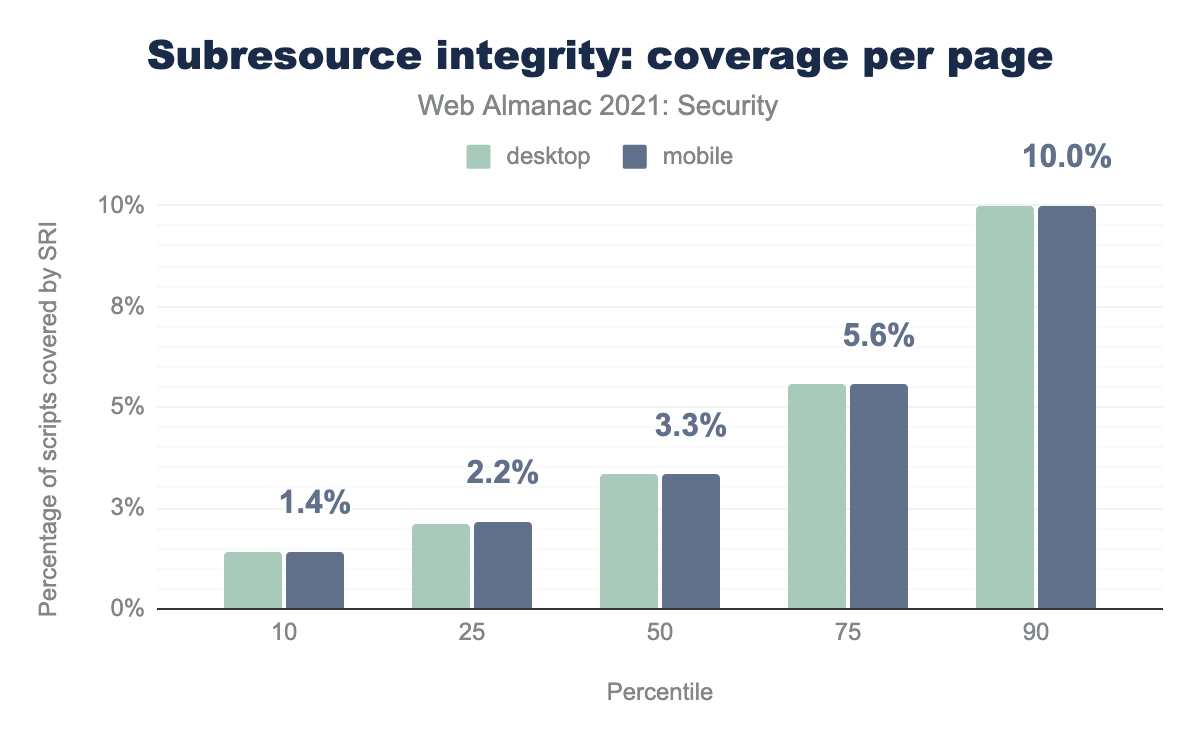

There has been some increase in the usage of SRI over the past couple of years, with 17.5% of elements on desktop and 16.1% on mobile containing the integrity attribute. On mobile, 82.6% of those were <script> elements.

However, SRI is still a minority option for <script> elements: the median percentage of <script> elements per website that have an integrity attribute is 3.3%.

| Host | Desktop | Mobile |
|---|---|---|
| www.gstatic.com | 44.3% | 44.1% |
| cdn.shopify.com | 23.4% | 23.9% |
| code.jquery.com | 7.5% | 7.5% |
| cdnjs.cloudflare.com | 7.2% | 6.9% |
| stackpath.bootstrapcdn.com | 2.7% | 2.7% |
| maxcdn.bootstrapcdn.com | 2.2% | 2.3% |
| cdn.jsdelivr.net | 2.1% | 2.1% |

Most of the common hosts from which SRI-protected scripts are included are CDNs. Three of them, serving jQuery, cdnjs, and Bootstrap, are used by many websites to load different libraries. It is probably no coincidence that all three include the integrity attribute in their example HTML code, so developers who copy those examples to embed the libraries end up loading SRI-protected scripts.

Permissions Policy

All browsers these days provide a myriad of APIs and functionalities, which can be used for tracking and malicious purposes, thus proving detrimental to the privacy of the users. Permissions Policy is a web platform API that gives a website the ability to allow or block the use of browser features in its own frame or in iframes that it embeds.

The Permissions-Policy response header lets websites decide which features they want to use and which powerful features they want to disallow, to limit misuse. A Permissions Policy can be used to control APIs like Geolocation, user media, video autoplay, encrypted media decoding and many more. While some of these APIs require the user's permission (a malicious script can't turn on the microphone without the user getting a permission pop-up), it's still good practice to use Permissions Policy to completely restrict features that the website does not need.

This API specification was previously known as Feature Policy, and besides the rename it has received many other updates. Though the Feature-Policy response header is still in use, its usage is low, with only 0.6% of websites on mobile sending it. The Permissions-Policy response header contains an allowlist for each API. For example, Permissions-Policy: geolocation=(self "https://example.com") means that the website disallows the use of the Geolocation API except for its own origin and the origin “https://example.com”. One can disable an API entirely on a website by specifying an empty list, e.g., Permissions-Policy: geolocation=().
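The header syntax in the examples above is easy to generate from a mapping of features to allowlists. A minimal Python sketch, assuming a hypothetical permissions_policy helper that emits self as a bare token, quotes every other entry as an origin, and does not handle the * wildcard:

```python
from typing import Dict, List


def permissions_policy(allowlists: Dict[str, List[str]]) -> str:
    """Serialize feature -> allowlist into a Permissions-Policy header value.

    "self" is emitted as a bare token; every other entry is treated as an
    origin and quoted. An empty list disables the feature entirely.
    """
    parts = []
    for feature, allowlist in allowlists.items():
        members = [m if m == "self" else f'"{m}"' for m in allowlist]
        parts.append(f"{feature}=({' '.join(members)})")
    return ", ".join(parts)
```

For example, {"geolocation": ["self", "https://example.com"], "microphone": []} produces geolocation=(self "https://example.com"), microphone=().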

We see 1.3% of websites on mobile already using Permissions-Policy. A possible reason for this higher than expected usage of a new header could be website admins choosing to opt out of Federated Learning of Cohorts (FLoC), which was experimentally implemented in Chrome, to protect users' privacy. The Privacy chapter has a detailed analysis of this.

| Directive | Desktop | Mobile |
|---|---|---|
| encrypted-media | 46.8% | 45.0% |
| conversion-measurement | 39.5% | 36.1% |
| autoplay | 30.5% | 30.1% |
| picture-in-picture | 17.8% | 17.2% |
| accelerometer | 16.4% | 16.0% |
| gyroscope | 16.4% | 16.0% |
| clipboard-write | 11.2% | 10.9% |
| microphone | 4.3% | 4.5% |
| camera | 4.2% | 4.4% |
| geolocation | 4.0% | 4.3% |

Most common allow directives on frames.

One can also use the allow attribute in <iframe> elements to enable or disable features allowed to be used in the embedded frame. 18.3% of 16.8 million frames in mobile contained the allow attribute to enable permission or feature policies.

As in previous years, the most used directives in allow attributes on iframes are still related to controls for embedded videos and media. The most used directive continues to be encrypted-media which is used to control access to the Encrypted Media Extensions API.

Iframe sandbox

An untrusted third party in an iframe could launch a number of attacks on the page. For instance, it could navigate the top page to a phishing page, launch popups with fake anti-virus advertisements, or mount other cross-frame scripting attacks.

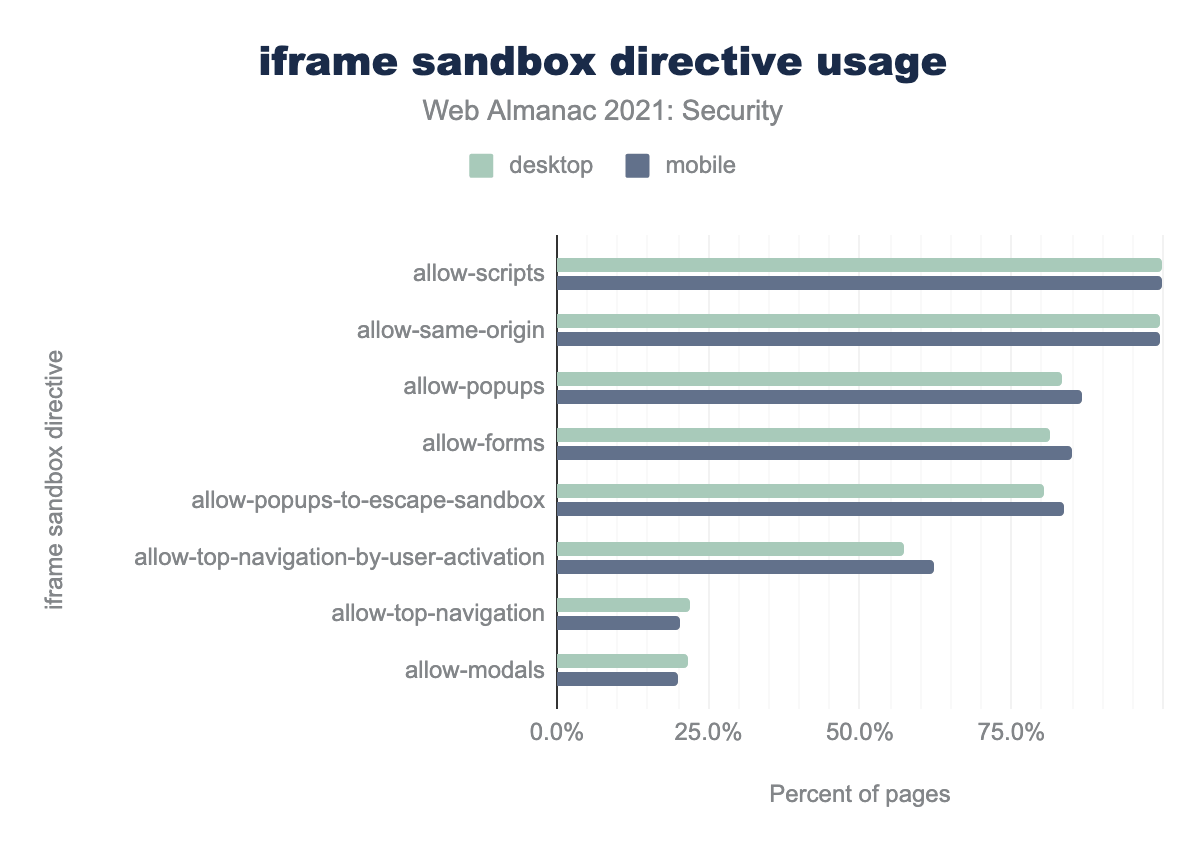

The sandbox attribute on iframes applies restrictions to the content, and therefore reduces the opportunities for launching attacks from the embedded web page. The value of the attribute can either be empty to apply all restrictions (the embedded page cannot execute any JavaScript code, no forms can be submitted, and no popups can be created, to name a few restrictions), or space-separated tokens to lift particular restrictions. As embedding third-party content such as advertisements or videos via iframes is common practice on the web, it is not surprising that many of these are restricted via the sandbox attribute: 19.7% of the iframes on desktop pages have a sandbox attribute while on mobile pages this is 21.0%.

Among iframes with a sandbox attribute, the directives are used as follows:

| Directive | Desktop | Mobile |
|---|---|---|
| allow-scripts | ~100% | ~100% |
| allow-same-origin | ~100% | ~100% |
| allow-popups | 83% | 87% |
| allow-forms | 81% | 85% |
| allow-popups-to-escape-sandbox | 80% | 84% |
| allow-top-navigation-by-user-activation | 57% | 62% |
| allow-top-navigation | 22% | 20% |
| allow-modals | 21% | 20% |

The most commonly used directive, allow-scripts, which is present in 99.98% of all sandbox policies on desktop pages, allows the embedded page to execute JavaScript code. The other directive present on virtually all sandbox policies, allow-same-origin, allows the embedded page to retain its origin and, for example, access cookies that were set on that origin.
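Since allow-scripts and allow-same-origin appear together almost everywhere, it is worth knowing that this combination is risky when the framed page shares the embedder's origin: the framed page can then script its parent and remove its own sandbox. The Python sketch below flags that case and the unrestricted allow-top-navigation token. sandbox_warnings and origin_of are hypothetical helpers, and the origin comparison is naive (it does not fold default ports, for example).

```python
from typing import List
from urllib.parse import urlsplit


def origin_of(url: str) -> str:
    """Naive origin: scheme://host[:port] as written (no default-port folding)."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}".lower()


def sandbox_warnings(sandbox: str, page_url: str, frame_url: str) -> List[str]:
    """Flag risky sandbox token combinations for one embedded frame."""
    tokens = set(sandbox.split())
    warnings = []
    if ({"allow-scripts", "allow-same-origin"} <= tokens
            and origin_of(page_url) == origin_of(frame_url)):
        warnings.append("same-origin frame can remove its own sandbox")
    if "allow-top-navigation" in tokens:
        warnings.append("frame can navigate the top page without user activation")
    return warnings
```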

Thwarting attacks

Web applications can be vulnerable to multiple attacks. Fortunately, there exist several mechanisms that can either prevent certain classes of vulnerabilities (e.g., framing protection through X-Frame-Options or CSP’s frame-ancestors directive is necessary to combat clickjacking attacks), or limit the consequences of an attack. As most of these protections are opt-in, they still need to be enabled by the web developers—typically by setting the correct response header. At large scale, the presence of the headers can tell us something about the security hygiene of websites and the incentives of the developers to protect their users.
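As a rough illustration of checking a response for such opt-in protections, here is a minimal Python sketch. audit_headers is a hypothetical helper covering only a few of the headers discussed in this chapter; it treats either X-Frame-Options (DENY or SAMEORIGIN) or a CSP frame-ancestors directive as framing protection.

```python
from typing import Dict


def audit_headers(headers: Dict[str, str]) -> Dict[str, bool]:
    """Report which of a few opt-in protections a response enables."""
    h = {name.lower(): value.strip() for name, value in headers.items()}
    xfo = h.get("x-frame-options", "").lower()
    csp_directives = [d.strip().lower()
                      for d in h.get("content-security-policy", "").split(";")]
    return {
        # Clickjacking: either X-Frame-Options or CSP frame-ancestors.
        "framing protection": xfo in ("deny", "sameorigin")
        or any(d.split()[:1] == ["frame-ancestors"] for d in csp_directives),
        "nosniff": h.get("x-content-type-options", "").lower() == "nosniff",
        "hsts": "strict-transport-security" in h,
        "csp": "content-security-policy" in h,
        "referrer policy": "referrer-policy" in h,
    }
```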

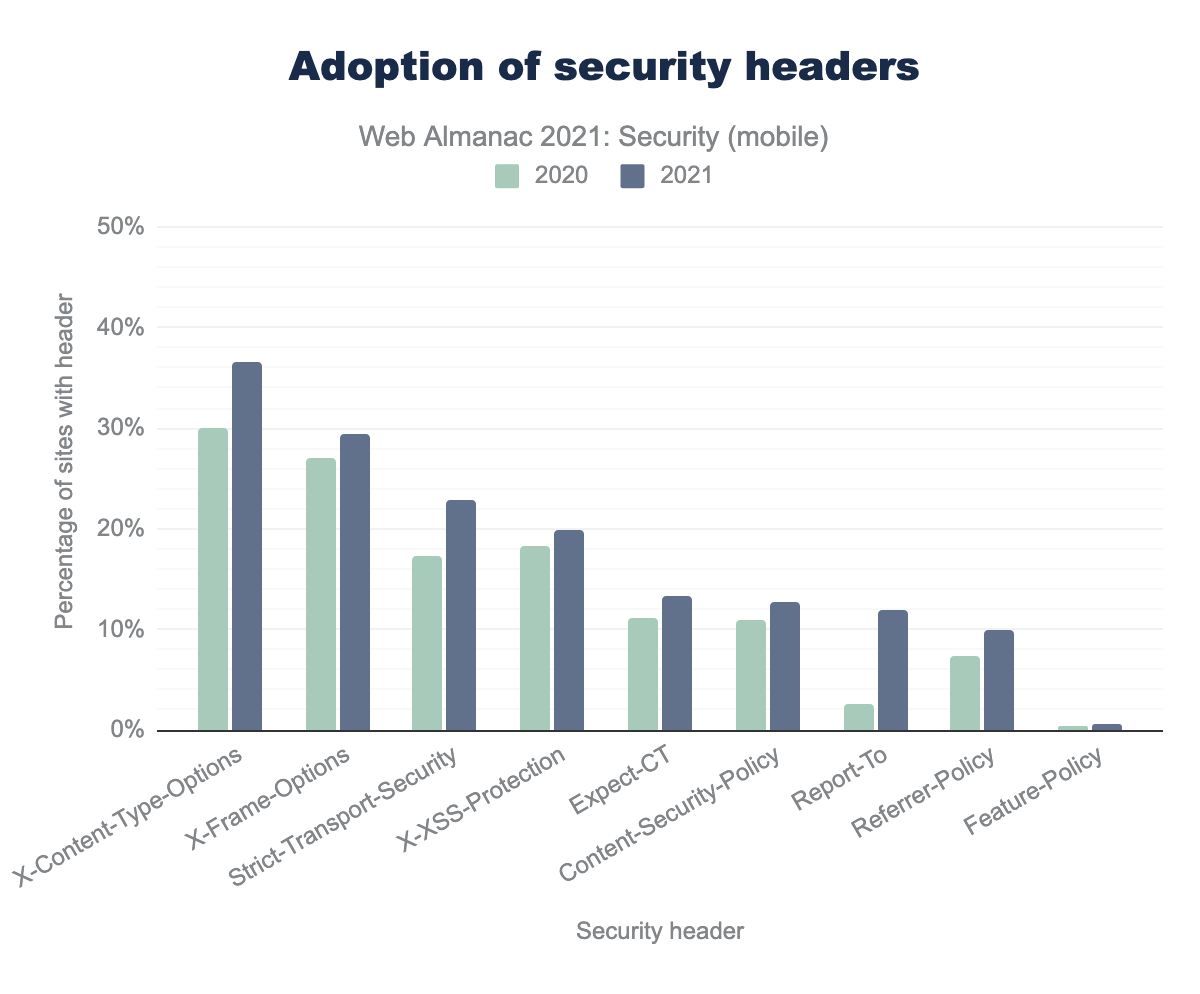

Security feature adoption

Security header adoption in 2020 and 2021:

| Header | 2020 | 2021 |
|---|---|---|
| X-Content-Type-Options | 30.0% | 36.6% |
| X-Frame-Options | 27.0% | 29.4% |
| Strict-Transport-Security | 17.4% | 22.9% |
| X-XSS-Protection | 18.4% | 20.0% |
| Expect-CT | 11.1% | 13.4% |
| Content-Security-Policy | 10.9% | 12.8% |
| Report-To | 2.5% | 11.9% |
| Referrer-Policy | 7.3% | 10.0% |
| Feature-Policy | 0.5% | 0.6% |

Perhaps the most promising and uplifting finding of this chapter is that the general adoption of security mechanisms continues to grow. Not only does this mean that attackers will have a more difficult time exploiting certain websites, it also indicates that more and more developers value the security of the web products they build. Overall, we see a relative increase of 10-30% in the adoption of security features compared to last year. The security-related mechanism with the most uptake is the Report-To header of the Reporting API, whose adoption rate increased more than fourfold, from 2.6% to 12.2%.

Although this continued increase in the adoption of security mechanisms is certainly encouraging, there is still considerable room for improvement. The most widely used security mechanism is still the X-Content-Type-Options header, which protects against MIME-sniffing attacks and is used on 36.6% of the mobile sites we crawled. It is followed by the X-Frame-Options header, which is enabled on 29.4% of all sites. Interestingly, only 5.6% of websites use the more flexible frame-ancestors directive of CSP.

Another interesting evolution is that of the X-XSS-Protection header. The feature is used to control the XSS filter of legacy browsers: Edge and Chrome retired their XSS filter in July 2018 and August 2019 respectively as it could introduce new unintended vulnerabilities. Yet, we found that the X-XSS-Protection header was 8.5% more prevalent than last year.

Features enabled in <meta> element

In addition to sending a response header, some security features can be enabled in the HTML response body with a <meta> element. For security reasons, only a limited number of policies can be enabled this way: a Content Security Policy can be set with <meta http-equiv="Content-Security-Policy">, and a Referrer Policy with <meta name="referrer">. We found that 0.4% and 2.6% of mobile sites, respectively, enabled these mechanisms this way.


When any other security mechanism is set via a <meta> tag, the browser ignores it. Interestingly, we found 3,410 sites that tried to enable X-Frame-Options via a <meta> tag and were therefore wrongly under the impression that they were protected against clickjacking attacks. Similarly, several hundred websites failed to deploy a security feature because they placed it in a <meta> tag instead of a response header (X-Content-Type-Options: 357, X-XSS-Protection: 331, Strict-Transport-Security: 183).
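Misplaced headers like these are easy to detect in markup. The Python sketch below uses the standard library's html.parser to list <meta http-equiv> elements that name one of the headers mentioned above, which browsers ignore in this position. The class and function names are hypothetical, and the set of ignored headers is limited to the ones discussed in this section.

```python
from html.parser import HTMLParser
from typing import List

# Response headers mentioned above that browsers ignore in <meta http-equiv>.
IGNORED_IN_META = {
    "x-frame-options",
    "x-content-type-options",
    "x-xss-protection",
    "strict-transport-security",
}


class MetaHeaderAudit(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.ineffective: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        http_equiv = dict(attrs).get("http-equiv")
        if http_equiv and http_equiv.strip().lower() in IGNORED_IN_META:
            self.ineffective.append(http_equiv.strip())


def ineffective_meta_headers(html: str) -> List[str]:
    """Return the http-equiv names that will have no effect."""
    auditor = MetaHeaderAudit()
    auditor.feed(html)
    auditor.close()
    return auditor.ineffective
```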

Stopping XSS attacks via CSP

CSP can be used to protect against a multitude of threats: it can prevent clickjacking attacks, block mixed-content inclusion, and determine the trusted sources from which content may be included (as discussed above).

Additionally, it is an essential mechanism to defend against XSS attacks. For instance, by setting a restrictive script-src directive, a web developer can ensure that only the application’s JavaScript code is executed (and not the attacker’s). Moreover, to defend against DOM-based cross-site scripting, it is possible to use Trusted Types, which can be enabled by using CSP’s require-trusted-types-for directive.
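Here is a minimal sketch of the script-src and Trusted Types approach described above: the Python below builds a nonce-based policy of the kind often called a "strict CSP". The extra directives (object-src 'none', base-uri 'none') and the /app.js path are illustrative assumptions, not a recommended policy for any particular site. At the time of writing, require-trusted-types-for was enforced mainly by Chromium-based browsers.

```python
import secrets


def new_nonce():
    # A fresh, unguessable value for every response: 16 random bytes, base64url-encoded.
    return secrets.token_urlsafe(16)


def build_strict_csp(nonce):
    """Build a nonce-based Content-Security-Policy header value (illustrative only)."""
    directives = [
        # Only scripts carrying this response's nonce may run; 'strict-dynamic'
        # extends that trust to scripts those nonced scripts add programmatically.
        f"script-src 'nonce-{nonce}' 'strict-dynamic'",
        "object-src 'none'",
        "base-uri 'none'",
        # Require Trusted Types for DOM XSS sinks such as innerHTML.
        "require-trusted-types-for 'script'",
    ]
    return "; ".join(directives)


# Per response: generate a nonce, send the header, and put the same nonce on scripts.
nonce = new_nonce()
header = build_strict_csp(nonce)
script_tag = f'<script nonce="{nonce}" src="/app.js"></script>'  # /app.js is hypothetical
```

The nonce must be regenerated for every response. A nonce that is reused or predictable lets an attacker's injected script carry it too.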

| Keyword | Desktop | Mobile |
|---|---|---|
| strict-dynamic | 5.2% | 4.5% |
| nonce- | 12.1% | 17.6% |
| unsafe-inline | 96.2% | 96.5% |
| unsafe-eval | 82.9% | 77.2% |

Usage of CSP keywords in policies that define a default-src or script-src directive.

Although we saw an overall moderate increase (17%) in the adoption of CSP, what is perhaps even more exciting is that the usage of strict-dynamic and nonces continues to grow. For instance, on desktop sites the use of strict-dynamic grew from 2.4% last year to 5.2% this year. Similarly, the use of nonces grew from 8.7% to 12.1%.

On the other hand, we find that the usage of the troubling keywords unsafe-inline and unsafe-eval is still fairly high. Note, however, that modern browsers ignore unsafe-inline when the policy also contains a nonce, a hash, or strict-dynamic, while older browsers without support for these features can fall back on it and still render the website. This does not apply to unsafe-eval, which stays in effect regardless.
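The fallback logic above can be sketched in code. The function below, a hypothetical and simplified model of CSP Level 3 behavior, reports which script-src expressions a modern browser would actually honor. It only handles the cases discussed here: unsafe-inline neutralized by nonces, hashes, or strict-dynamic, and host/scheme sources and 'self' dropped under strict-dynamic. It does not model every rule in the specification.

```python
def effective_script_sources(policy):
    """Split script-src expressions into (honored, ignored) for a CSP3 browser."""
    directives = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            # If a directive is repeated, the first occurrence wins.
            directives.setdefault(tokens[0].lower(), tokens[1:])
    # script-src falls back to default-src when it is absent.
    sources = directives.get("script-src", directives.get("default-src", []))
    lowered = [s.lower() for s in sources]

    has_nonce_or_hash = any(
        s.startswith(("'nonce-", "'sha256-", "'sha384-", "'sha512-")) for s in lowered
    )
    strict_dynamic = "'strict-dynamic'" in lowered

    honored, ignored = [], []
    for src, low in zip(sources, lowered):
        if low == "'unsafe-inline'" and (has_nonce_or_hash or strict_dynamic):
            ignored.append(src)  # kept only as a fallback for older browsers
        elif strict_dynamic and (low == "'self'" or not low.startswith("'")):
            ignored.append(src)  # host/scheme sources and 'self' are dropped
        else:
            honored.append(src)  # includes 'unsafe-eval', which is never neutralized
    return honored, ignored


print(effective_script_sources(
    "script-src 'nonce-abc123' 'strict-dynamic' 'unsafe-inline' 'unsafe-eval' https:"
))
# (["'nonce-abc123'", "'strict-dynamic'", "'unsafe-eval'"], ["'unsafe-inline'", 'https:'])
```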

Defending against XS-Leaks

Various new security features have been introduced to allow web developers to defend their websites against micro-architectural attacks, such as Spectre, and other attacks that are typically referred to as XS-Leaks. Given that many of these attacks were only discovered in the last few years, the mechanisms used to tackle them obviously are very recent as well, which might explain the relatively low adoption rate. Nevertheless, compared to last year, the cross-origin policies have significantly increased in adoption.

The Cross-Origin-Resource-Policy header, which indicates to the browser how a resource may be included (cross-origin, same-site, or same-origin), is now present on 106,443 (1.5%) sites, up from 1,712 sites last year. The most likely explanation is that cross-origin isolation is a requirement for using features such as SharedArrayBuffer and high-resolution timers, and enabling it requires setting the site's Cross-Origin-Embedder-Policy to require-corp (together with Cross-Origin-Opener-Policy: same-origin). In essence, sites that wish to use those features need every cross-origin subresource they load to opt in, typically by setting the Cross-Origin-Resource-Policy response header.

Consequently, several CDNs now set the header with a value of cross-origin (as CDN resources are typically meant to be included in a cross-site context). We can see that this is indeed the case, as 96.8% of sites set the CORP header value to cross-origin, compared to 2.9% that set it to same-site and 0.3% that use the more restrictive same-origin.
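The header interplay above can be summarized in a short sketch. The dict names, helper functions, and the `relation` labels ("same-origin", "same-site", "cross-site") are hypothetical. The model is simplified: it checks only the two headers that make a document cross-origin isolated, and whether a no-cors subresource passes COEP require-corp based on its CORP value. It ignores the alternative of loading resources with CORS.

```python
# Headers on the top-level document that wants cross-origin isolation.
DOCUMENT_HEADERS = {
    "Cross-Origin-Opener-Policy": "same-origin",
    "Cross-Origin-Embedder-Policy": "require-corp",
}

# Headers a CDN might send on resources meant to be embedded by any site.
CDN_RESOURCE_HEADERS = {"Cross-Origin-Resource-Policy": "cross-origin"}


def _normalize(headers):
    # Header names and these particular values are compared case-insensitively.
    return {k.lower(): v.strip().lower() for k, v in headers.items()}


def is_cross_origin_isolated(document_headers):
    h = _normalize(document_headers)
    return (h.get("cross-origin-opener-policy") == "same-origin"
            and h.get("cross-origin-embedder-policy") == "require-corp")


def embeddable_under_require_corp(resource_headers, relation):
    """Would a no-cors subresource load under COEP: require-corp (simplified)?"""
    if relation == "same-origin":
        return True
    corp = _normalize(resource_headers).get("cross-origin-resource-policy")
    if relation == "same-site":
        return corp in ("same-site", "cross-origin")
    return corp == "cross-origin"  # cross-site resources must explicitly opt in
```

This is why CDNs tend to send cross-origin: it is the only CORP value that lets arbitrary third-party sites embed the resource once those sites enable require-corp.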

With this change, it is no surprise that the adoption of Cross-Origin-Embedder-Policy is also steadily increasing: in 2021, 911 sites enabled this header—significantly more than the 6 sites of last year. It will be interesting to see how this will further develop next year!

Finally, another anti-XS-Leak header, Cross-Origin-Opener-Policy, has also seen a significant boost compared to last year. We found 15,727 sites that now enable this security mechanism, whereas last year only 31 sites were protected from certain XS-Leak attacks this way.

Web Cryptography API

Security has become one of the central issues in web development. The Web Cryptography API W3C recommendation was introduced in 2017 to perform basic cryptographic operations (e.g., hashing, signature generation and verification, and encryption and decryption) on the client-side, without any third-party library. We analyzed the usage of this JavaScript API.

| Cryptography API | Desktop | Mobile |
|---|---|---|
| CryptoGetRandomValues | 70.4% | 67.4% |
| SubtleCryptoDigest | 0.4% | 0.5% |
| SubtleCryptoEncrypt | 0.4% | 0.3% |
| CryptoAlgorithmSha256 | 0.3% | 0.3% |
| SubtleCryptoGenerateKey | 0.3% | 0.2% |
| CryptoAlgorithmAesGcm | 0.2% | 0.2% |
| SubtleCryptoImportKey | 0.2% | 0.2% |
| CryptoAlgorithmAesCtr | 0.1% | < 0.1% |
| CryptoAlgorithmSha1 | 0.1% | 0.1% |
| CryptoAlgorithmSha384 | 0.1% | 0.2% |

The popularity of these functions remains almost the same as last year: we record only a slight increase of 0.7 percentage points (from 71.8% to 72.5%). Again, this year Crypto.getRandomValues is the most popular cryptography API. It allows developers to generate cryptographically strong pseudo-random numbers. We still believe that Google Analytics has a major effect on its popularity, since the Google Analytics script utilizes this function.
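Crypto.getRandomValues and SubtleCrypto.digest are browser APIs, so this illustration swaps in their closest Python standard-library counterparts, `secrets.token_bytes` and `hashlib.sha256`. This is a server-side equivalent, not the Web Cryptography API itself. In a browser, `crypto.getRandomValues(new Uint8Array(16))` fills a typed array with cryptographically strong random bytes. Likewise, `crypto.subtle.digest("SHA-256", data)` resolves to an ArrayBuffer holding the same 32-byte hash that `hashlib` produces for the same input. The helper names below are hypothetical.

```python
import hashlib
import secrets


def random_bytes(n):
    # Counterpart of crypto.getRandomValues(new Uint8Array(n)): CSPRNG output.
    return secrets.token_bytes(n)


def sha256_hex(data):
    # Counterpart of crypto.subtle.digest("SHA-256", data), rendered as hex.
    return hashlib.sha256(data).hexdigest()


print(sha256_hex(b"abc"))
# ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad
```

Because both sides implement the same algorithm, a server can recompute and compare a digest that client-side code produced with SubtleCrypto.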

Note that because we perform passive crawling, our results in this section cannot capture cases where user interaction is required before these functions are executed.

Utilizing bot protection services

Many cyberattacks rely on automated bots, and this threat appears to be growing. According to Imperva's Bad Bot Report 2021, bad bots accounted for 25.6% of all website traffic in 2020, up from 24.1% in 2019 according to the previous report. In the following table, we present our results on the measures websites use to protect themselves from malicious bots.

| Service provider | Desktop | Mobile |
|---|---|---|
| reCAPTCHA | 10.2% | 9.4% |
| Imperva | 0.3% | 0.3% |
| Sift | 0.1% | 0.1% |
| Signifyd | 0.03% | 0.03% |
| hCaptcha | 0.03% | 0.02% |
| Forter | 0.03% | 0.03% |
| TruValidate | 0.03% | 0.02% |
| Akamai Web Application Protector | 0.02% | 0.02% |
| Kount | 0.02% | 0.02% |
| Konduto | 0.02% | 0.02% |
| PerimeterX | 0.02% | 0.01% |
| Tencent Waterproof Wall | 0.01% | 0.01% |
| Others | 0.03% | 0.04% |

Our analysis shows that 10.7% of desktop websites and 9.9% of mobile websites use a mechanism to fight malicious bots. Last year those numbers were 8.3% and 7.3%, respectively, which is roughly a 30% relative increase. As last year, we identified bot protection mechanisms more often on desktop versions than on mobile versions (10.7% vs. 9.9%).
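The "approximately 30%" figure is a relative increase, not a change in percentage points. Conflating the two is a common source of confusion in adoption statistics. The small sketch below separates them using the bot-protection figures quoted above; the `change` helper is hypothetical.

```python
def change(before, after):
    """Return (percentage-point change, relative change in %) between two shares."""
    points = after - before
    relative = points / before * 100
    return round(points, 1), round(relative, 1)


print(change(8.3, 10.7))  # desktop: (2.4, 28.9)
print(change(7.3, 9.9))   # mobile:  (2.6, 35.6)
```

A gain of only 2.4 to 2.6 percentage points therefore corresponds to a relative increase of about 29% to 36%, which the text summarizes as roughly 30%.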

We also see new popular players as bot protection providers in our dataset (e.g., hCaptcha).

Drivers of security mechanism adoption

There are many different influences that might cause a website to invest more in their security posture. Examples of such factors are societal (e.g., more security-oriented education in certain countries, or laws that take more punitive measures in case of a data breach), technological (e.g., it might be easier to adopt security features in certain technology stacks, or certain vendors might enable security features by default), or threat-based (e.g., widely popular websites may face more targeted attacks than a website that is little known). In this section, we try to assess to what extent these factors influence the adoption of security features.

Where website’s visitors connect from

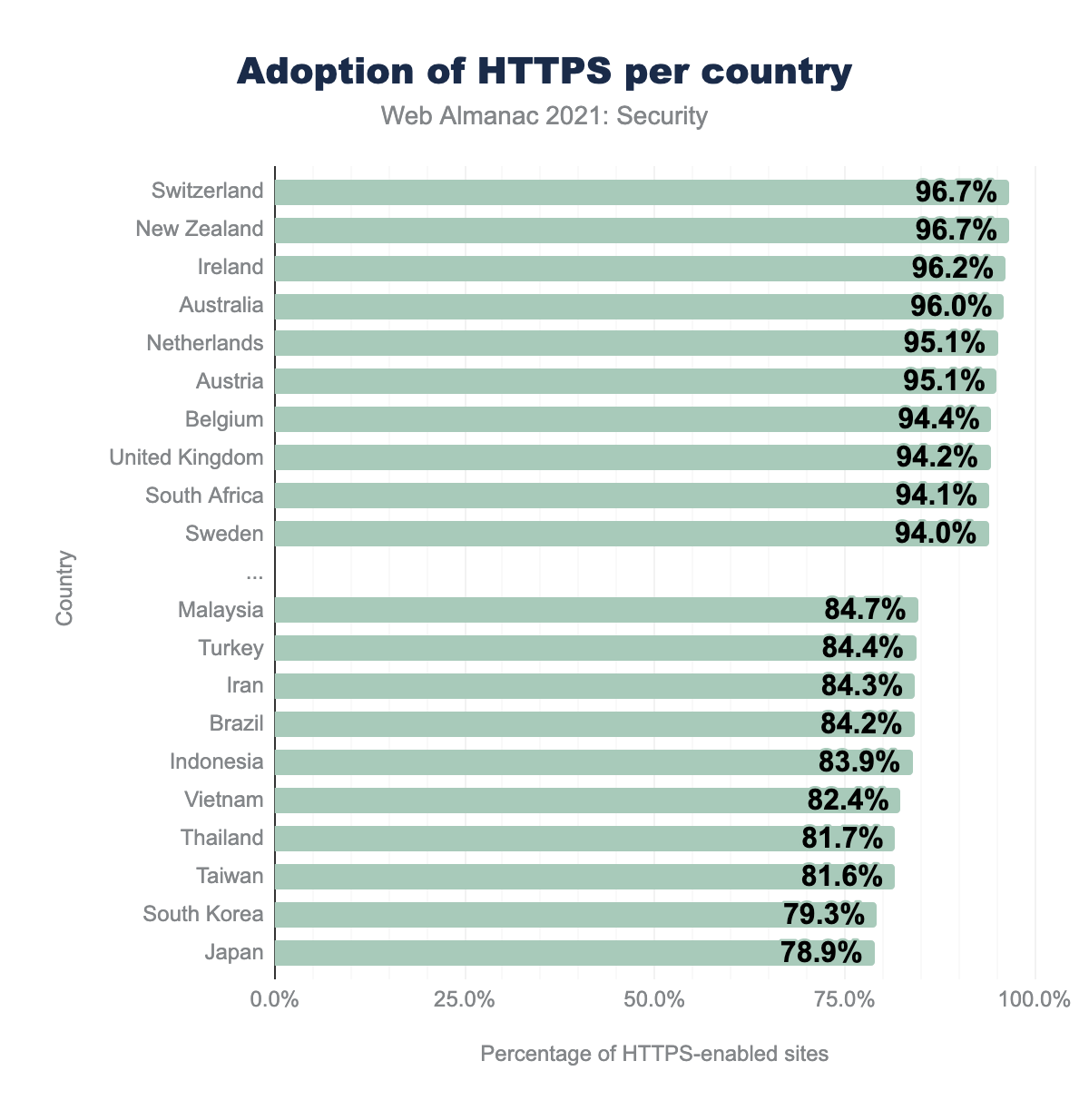

Although we can see that the adoption of HTTPS-by-default is generally increasing, there is still a discrepancy in adoption rate between sites depending on the country most of the visitors originate from.

We find that compared to last year, the Netherlands has now made it into the top 5, which means that the Dutch are relatively well protected against transport layer attacks: 95.1% of the sites frequently visited by people in the Netherlands have HTTPS enabled (compared to 93.0% last year). In fact, the Netherlands is not the only country that improved its HTTPS adoption; virtually every country improved in that regard.

It is also very encouraging to see that several of the countries that performed worst last year made a big leap. For instance, 13.4% more sites visited by people from Iran (the strongest riser in HTTPS adoption) are now HTTPS-enabled compared to last year (from 74.3% to 84.3%, a rise of 10 percentage points). Although the gap between the best-performing and least-performing countries is becoming smaller, significant effort is still needed.

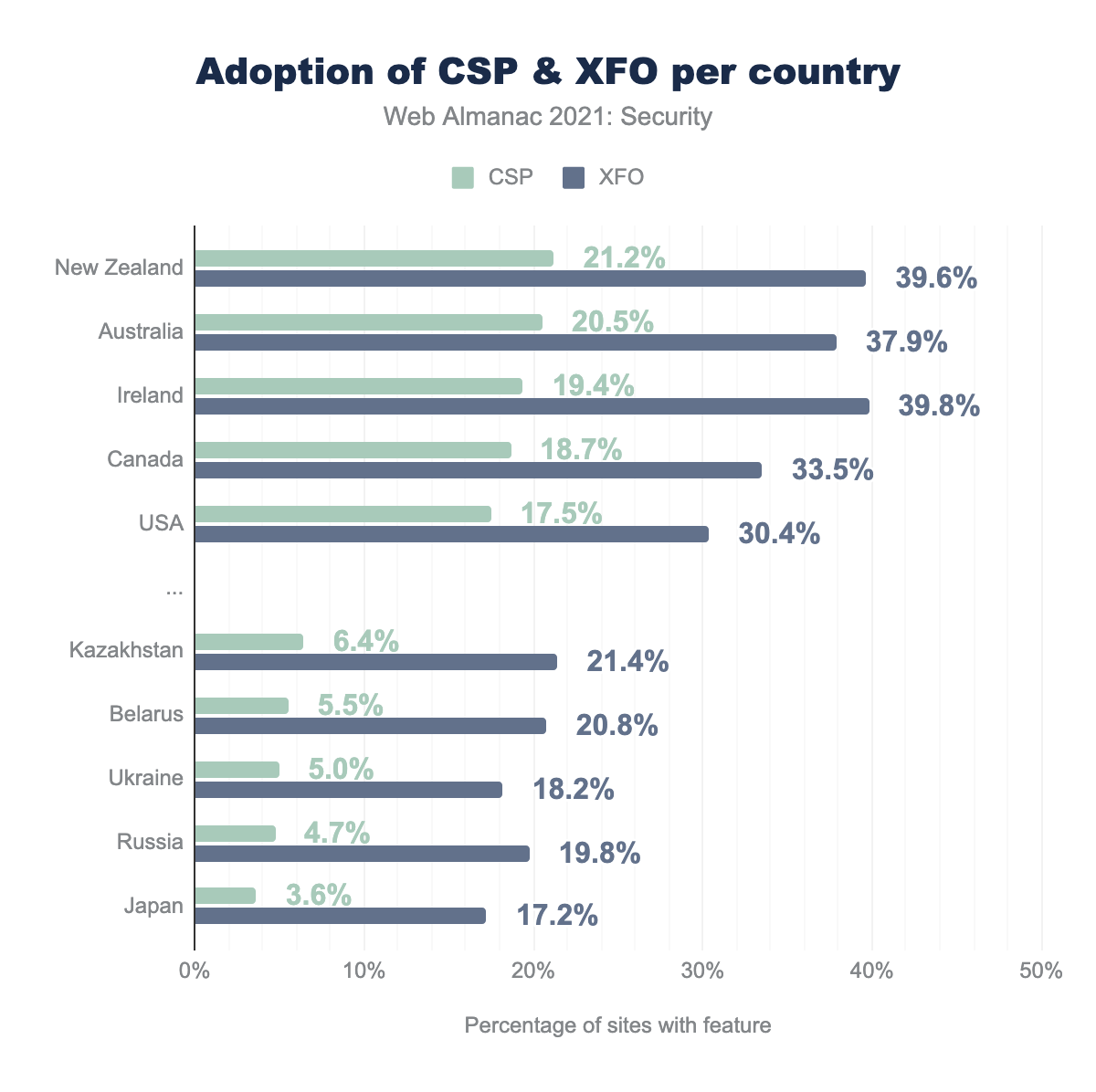

When looking at the adoption of security features such as CSP and X-Frame-Options, we see an even more pronounced difference between countries: sites from the top-scoring countries are two to four times more likely to adopt these features than sites from the least-performing countries. We also find that countries that perform well on HTTPS adoption tend to perform well on the adoption of other security mechanisms too. This suggests that security is often approached holistically, with all angles covered. And rightfully so: an attacker just needs to find a single exploitable vulnerability, whereas developers need to ensure that every aspect is tightly protected.

Technology stack

| Technology | Security features enabled by default |
|---|---|
| Automattic (PaaS) | Strict-Transport-Security (97.8%) |
| Blogger (Blogs) | X-Content-Type-Options (99.6%), X-XSS-Protection (99.6%) |
| Cloudflare (CDN) | Expect-CT (93.1%), Report-To (84.1%) |
| Drupal (CMS) | X-Content-Type-Options (77.9%), X-Frame-Options (83.1%) |
| Magento (E-commerce) | X-Frame-Options (85.4%) |
| Shopify (E-commerce) | Content-Security-Policy (96.4%), Expect-CT (95.5%), Report-To (95.5%), Strict-Transport-Security (98.2%), X-Content-Type-Options (98.3%), X-Frame-Options (95.2%), X-XSS-Protection (98.2%) |
| Squarespace (CMS) | Strict-Transport-Security (87.9%), X-Content-Type-Options (98.7%) |
| Sucuri (CDN) | Content-Security-Policy (84.0%), X-Content-Type-Options (88.8%), X-Frame-Options (88.8%), X-XSS-Protection (88.7%) |
| Wix (Blogs) | Strict-Transport-Security (98.8%), X-Content-Type-Options (99.4%) |

Another factor that can strongly influence the adoption of certain security mechanisms is the technology stack that’s being used to build a website. In some cases, security features may be enabled by default, or for some blogging systems the control over the response headers may be out of the hands of the website owner and a platform-wide security setting may be in place.

Alternatively, CDNs may add additional security features, especially when these concern the transport security. In the above table, we’ve listed the nine technologies that are used by at least 25,000 sites, and that have a significantly higher adoption rate of specific security mechanisms. For instance, we can see that sites that are built with the Shopify e-commerce system have a very high (over 95%) adoption rate for seven security-relevant headers: Content-Security-Policy, Expect-CT, Report-To, Strict-Transport-Security, X-Content-Type-Options, X-Frame-Options, and X-XSS-Protection.

It is great to see that, despite the variability in the content of sites built with these technologies, it is still possible to adopt these security mechanisms uniformly.

Another interesting entry in this list is Drupal, whose websites have an adoption rate of 83.1% for the X-Frame-Options header (a slight improvement over last year's 81.8%). As Drupal enables this header by default, it is clear that most Drupal sites keep it, which protects them from clickjacking attacks. Note that while it makes sense to keep the X-Frame-Options header for compatibility with older browsers in the near term, site owners should consider transitioning to the frame-ancestors directive of the Content-Security-Policy header, which is the recommended way to get the same functionality.
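For sites following the advice above, here is a minimal sketch that maps an X-Frame-Options value to the corresponding frame-ancestors directive. The function name is hypothetical. The mapping covers only the standard values. ALLOW-FROM is obsolete and ignored by modern browsers, which is one more reason to express that intent through frame-ancestors instead.

```python
def xfo_to_frame_ancestors(xfo_value):
    """Translate an X-Frame-Options value into a CSP frame-ancestors directive."""
    value = xfo_value.strip()
    upper = value.upper()
    if upper == "DENY":
        return "frame-ancestors 'none'"   # no page may frame this one
    if upper == "SAMEORIGIN":
        return "frame-ancestors 'self'"   # only same-origin pages may frame it
    if upper.startswith("ALLOW-FROM "):
        # Obsolete in X-Frame-Options; frame-ancestors accepts the origin directly.
        return "frame-ancestors " + value.split(None, 1)[1].strip()
    raise ValueError(f"unrecognized X-Frame-Options value: {xfo_value!r}")
```

During a transition, a site can send both headers. Browsers that support frame-ancestors give it precedence, while older ones fall back to X-Frame-Options.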

An important aspect to explore in the context of the adoption of security features is diversity. For instance, Cloudflare is the largest CDN provider, powering millions of websites (see the CDN chapter for further analysis on this), so any feature that Cloudflare enables by default results in a large overall adoption rate. In fact, 98.2% of the sites that employ the Expect-CT feature are powered by Cloudflare, indicating that adoption of this mechanism is concentrated almost entirely with a single provider.

Overall, however, we find that a single actor such as Drupal or Cloudflare being the top technological driver of a security feature's adoption is the exception rather than the rule, and this is becoming less common over time. This means that an increasingly diverse set of websites is adopting security mechanisms, and that more and more web developers are becoming aware of their benefits. For example, last year 44.3% of the sites that set a Content Security Policy were powered by Shopify, whereas this year Shopify accounts for only 32.9% of all sites that enable CSP. Combined with the generally growing adoption rate, this is great news!

Website popularity

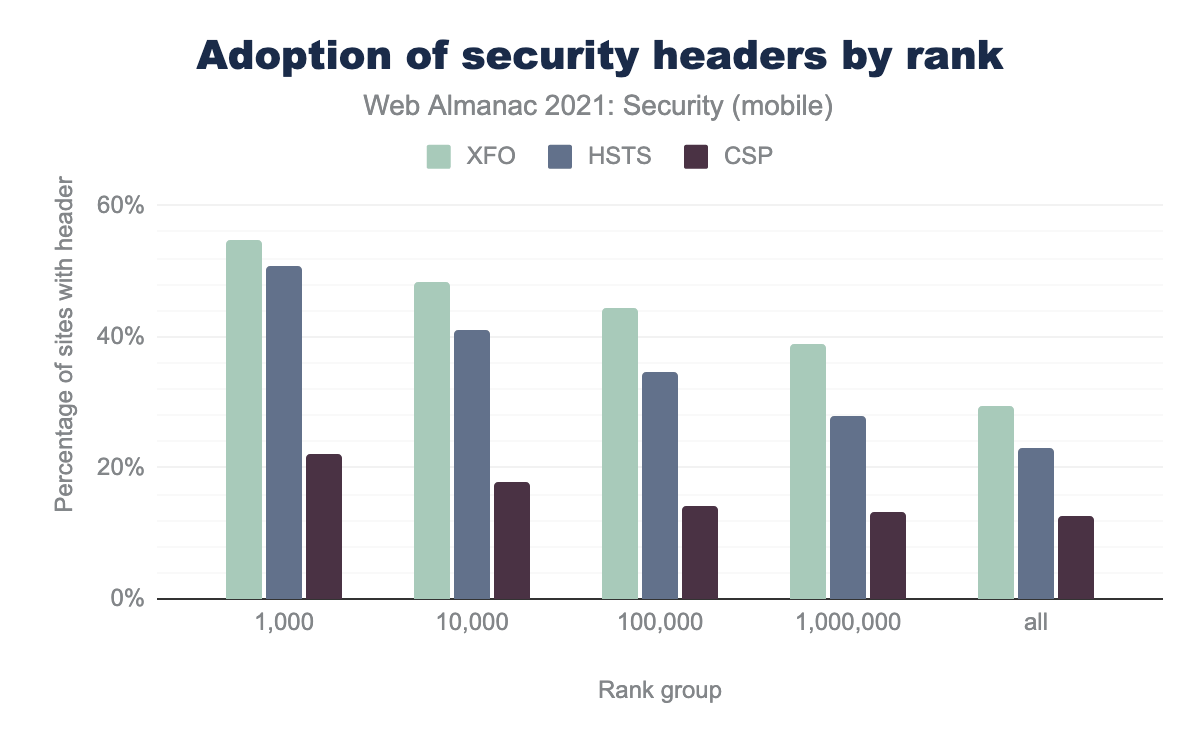

Websites that have many visitors may be more prone to targeted attacks given that there are more users with potentially sensitive data to attract attackers. Therefore, it can be expected that widely visited websites invest more in security in order to safeguard their users. To evaluate whether this hypothesis is valid, we used the ranking provided by the Chrome User Experience Report, which uses real-world user data to determine which websites are visited the most (ranked by top 1k, 10k, 100k, 1M and all sites in our dataset).

We can see that the adoption of certain security features, X-Frame-Options (XFO), Content Security Policy (CSP), and Strict Transport Security (HSTS), is highly related to the ranking of sites. For instance, the 1,000 top visited sites are almost twice as likely to adopt a certain security header compared to the overall adoption. We can also see that the adoption rate for each feature is higher for higher-ranked websites.

We can draw two conclusions from this: on the one hand, having better “security hygiene” on sites that attract more visitors benefits a larger fraction of users (who might be more inclined to share their personal data with well-known trusted sites). On the other hand, the lower adoption rate of security features on less-visited sites could be indicative that it still requires a substantial investment to (correctly) implement these features. This investment may not always be feasible for smaller websites. Hopefully, we will see a further increase in security features that are enabled by default in certain technology stacks, which could further enhance the security of many sites without requiring too much effort from web developers.

Malpractices on the web

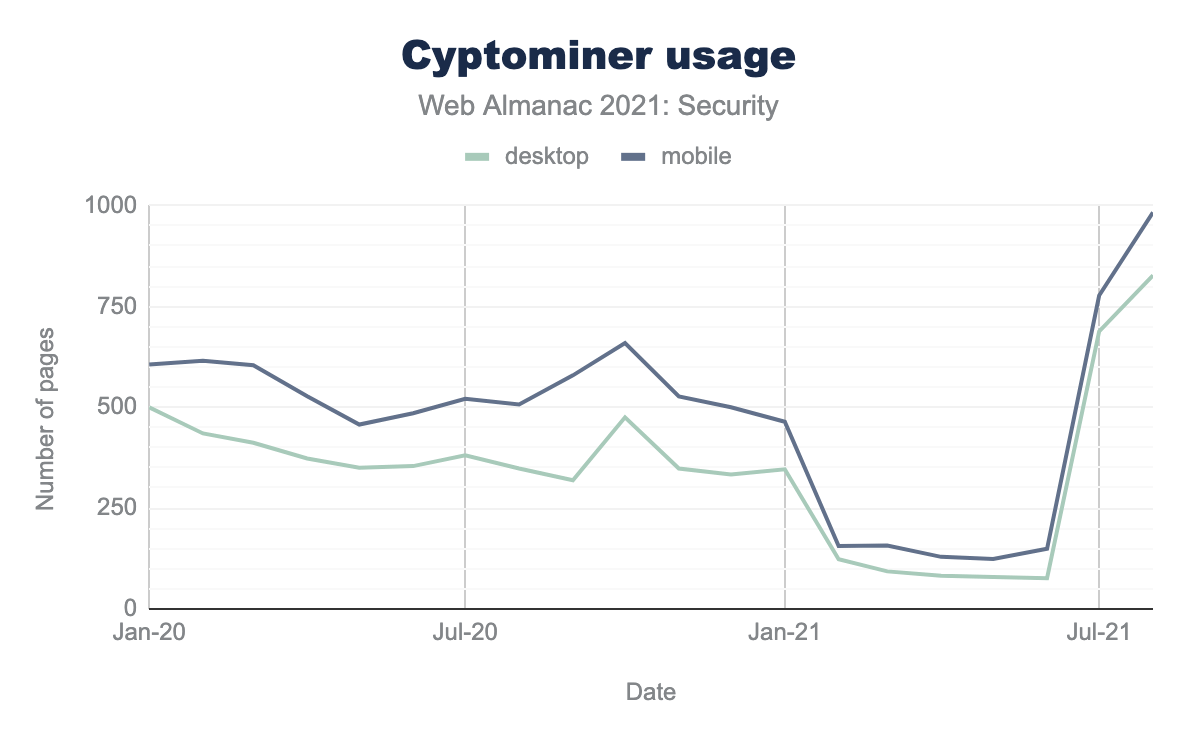

Cryptocurrencies have become an increasingly familiar part of our modern society. Global cryptocurrency adoption has been skyrocketing since the beginning of the pandemic. Because of their economic value, cybercriminals have also become more interested in cryptocurrencies. This has led to the creation of a new attack vector: cryptojacking. Attackers have discovered the power of WebAssembly and exploit it to mine cryptocurrencies in visitors' browsers while they browse a website.

The figure above shows our findings regarding cryptominer usage on the web.

According to our dataset, until recently we saw a steady decrease in the number of websites running cryptominers. However, the number of such websites has now increased more than tenfold in the past two months. Such spikes are typical, for example, when widespread cryptojacking attacks take place or when a popular JavaScript library has been compromised.

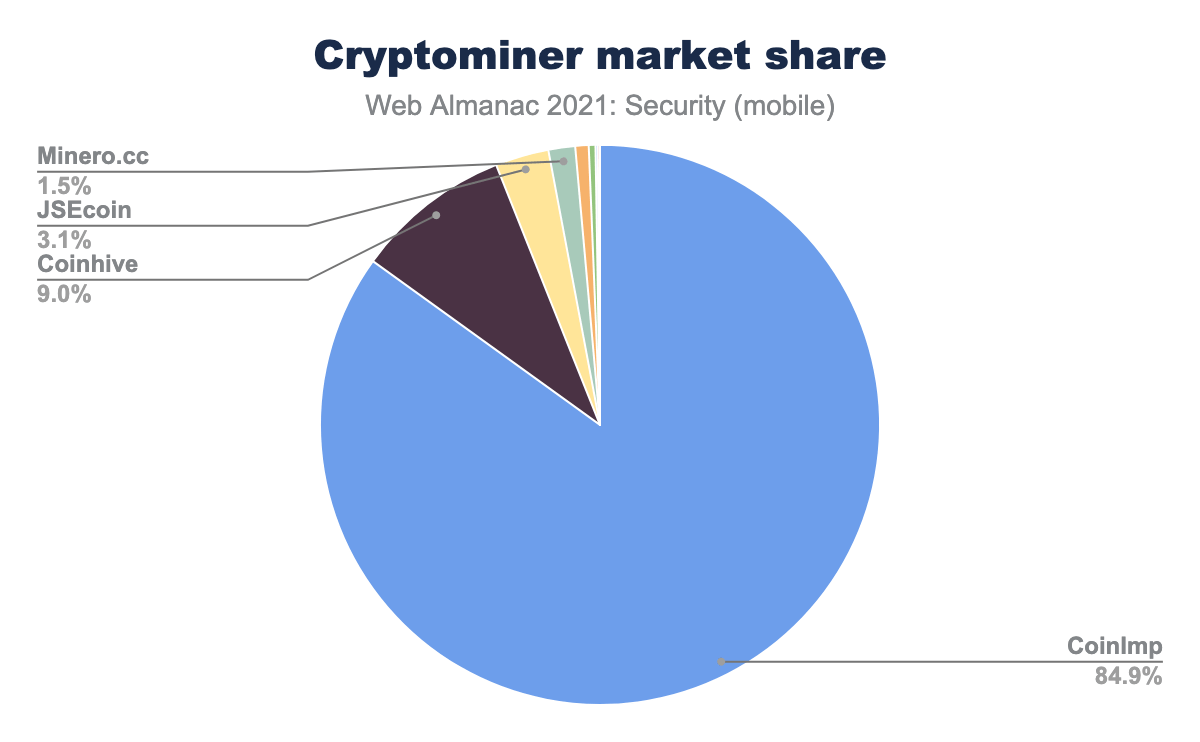

We now turn to cryptominer market share, shown in the figure above.

We see that Coinhive has been surpassed by CoinImp as the dominant cryptomining service. One of the main reasons is that Coinhive shut down in March 2019. Interestingly, the domain is now owned by Troy Hunt, who displays aggressive banners on it in an effort to make the sites still hosting the Coinhive script (desktop: 5.7%, mobile: 9.0%) aware that they are still doing so, often without their knowledge. This reflects both the prevalence of Coinhive scripts more than two years after the service ceased to operate, and the risk of including third-party resources that can be taken over once that third party stops operating. With Coinhive's demise, CoinImp has clearly become the market leader (84.9% share).
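Subresource Integrity (SRI) is one widely supported mitigation for the takeover risk just described, although this chapter does not measure it here. The page pins the exact bytes of a third-party script, and the browser refuses to execute the file if the content served later differs. The sketch below computes an SRI value with Python's standard library. The helper names and the example URL are hypothetical.

```python
import base64
import hashlib


def sri_integrity(content, algorithm="sha384"):
    """Compute a Subresource Integrity value for the exact bytes of a resource."""
    # SRI accepts sha256, sha384, and sha512 digests, base64-encoded.
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src, content):
    # crossorigin="anonymous" makes the browser fetch the script with CORS,
    # which SRI requires for cross-origin resources.
    return (f'<script src="{src}" integrity="{sri_integrity(content)}" '
            f'crossorigin="anonymous"></script>')


# Hypothetical usage: pin the library version the site was tested with.
tag = script_tag("https://cdn.example/lib.js", b"console.log('hello');")
```

SRI suits versioned, immutable files. A script that its provider updates in place would stop loading after every change.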

Our results suggest that cryptojacking is still a serious attack vector and that appropriate countermeasures are needed.

Note that not all of these websites are infected. Website operators may also deploy this technique (instead of showing ads) to finance their website. However, this practice is the subject of ongoing technical, legal, and ethical debate.

Please also note that our results may not reflect the actual number of websites infected with cryptojacking. Since we run our crawler once a month, not all websites that run cryptominers can be discovered. This is the case, for example, if a website remains infected for only a few days that do not include the day our crawler ran.

security.txt

security.txt is a file format that gives websites a standard way to describe how vulnerabilities should be reported. Website providers can list contact details, a PGP key, a disclosure policy, and other information in this file. White hat hackers can then use this information when conducting security analyses of these websites or reporting a vulnerability.

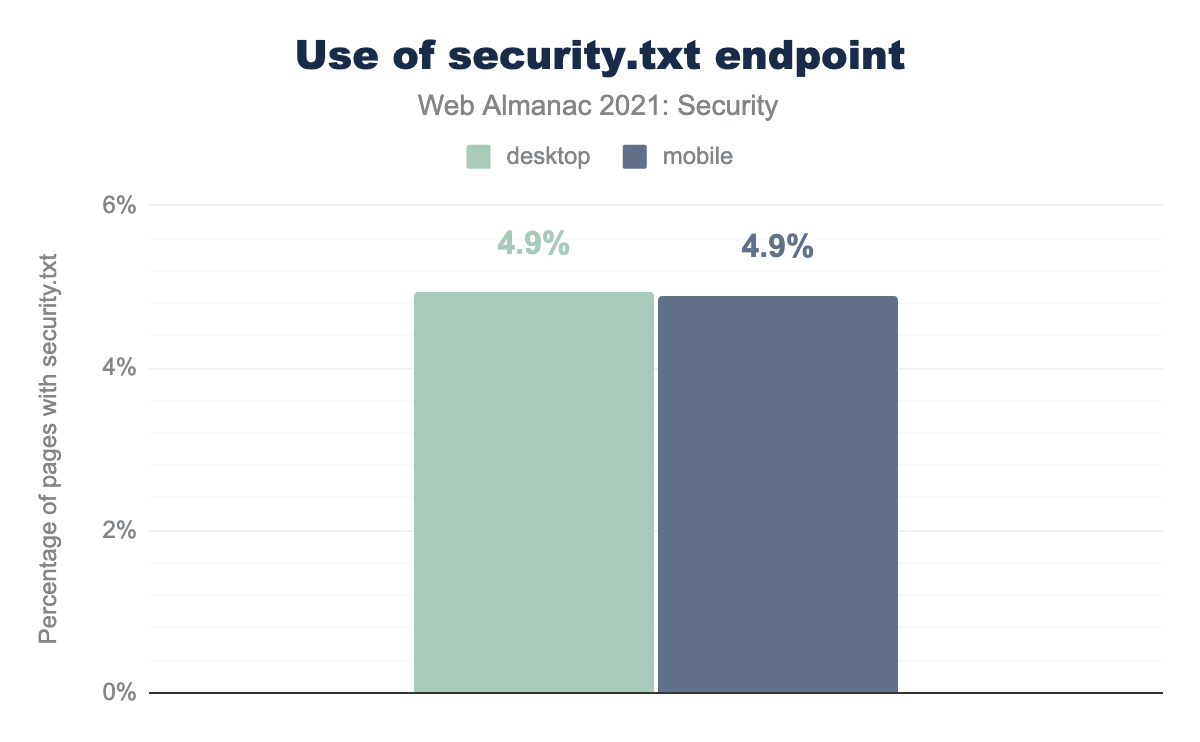

Usage of the security.txt endpoint.

We see that just under 5% of websites return a response when asked for the /.well-known/security.txt URL. However, inspecting many of these responses shows that they are effectively 404 pages incorrectly returning a 200 status code, so actual usage is likely much lower.
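Here is a minimal heuristic for separating genuine files from the soft-404 pages described above. It draws on the specification, since published as RFC 9116, which requires a text/plain response and at least one Contact field. The function name is hypothetical, and the check is deliberately loose: it does not validate the other fields or a signature.

```python
def looks_like_security_txt(status, content_type, body):
    """Heuristic: does a /.well-known/security.txt response look genuine?"""
    if status != 200:
        return False
    # The format is plain text; an HTML page served with 200 is likely a soft 404.
    if not content_type.lower().startswith("text/plain"):
        return False
    # A real file must contain at least one Contact field.
    return any(
        line.strip().lower().startswith("contact:") for line in body.splitlines()
    )
```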

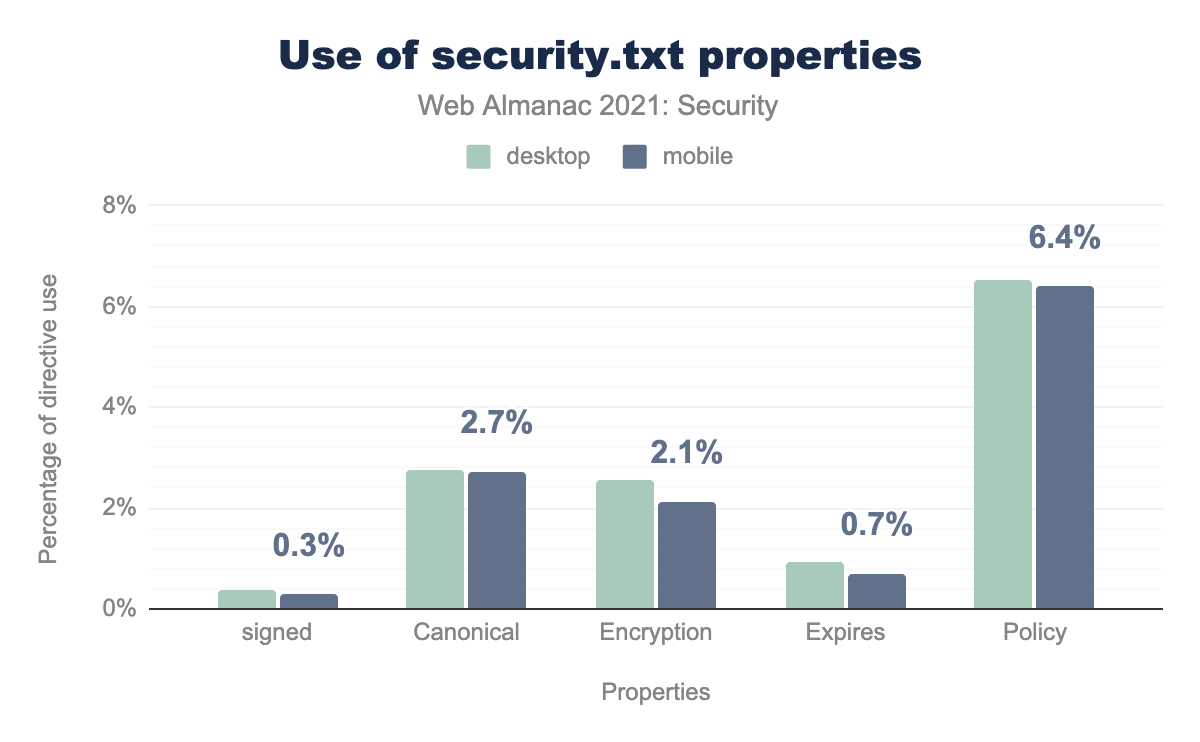

Usage of security.txt properties (percentage of security.txt files):

| Property | Desktop | Mobile |
|---|---|---|
| Signed | 0.4% | 0.3% |
| Canonical | 2.8% | 2.7% |
| Encryption | 2.6% | 2.1% |
| Expires | 0.9% | 0.7% |
| Policy | 6.5% | 6.4% |

We see that Policy is the most used property in security.txt files, but even so, it appears in only 6.4% of sites with a security.txt URL. This property includes a link to the website's vulnerability disclosure policy, which helps researchers understand the reporting practices they need to follow. Since most genuine files are expected to have a Policy value, this is likely a better indicator of real security.txt usage. A rate of 6.4% among the roughly 5% of sites that return a response suggests that closer to 0.3% of all sites have a “real” security.txt file, rather than the 5% measured above.

Another interesting point is that, within this subset of “real” security.txt URLs, Tumblr makes up 63%-65% of the usage. It appears that Tumblr sets this file by default on the domains it hosts, pointing to Tumblr's own contact details. On the one hand, this shows how a single platform can drive the adoption of new security features. On the other hand, it indicates that even fewer sites deliberately deploy their own security.txt file.

The other most used properties include Canonical and Encryption. Canonical indicates where the security.txt file is located. If the URI used to retrieve the security.txt file does not match any of the URIs listed in the Canonical fields, the contents of the file should not be trusted. Encryption provides security researchers with an encryption key that they can use for encrypted communication.
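Here is a minimal sketch of reading these fields and applying the Canonical rule described above. Both helper names are hypothetical. The parser is deliberately simple: it skips comments and lines without a colon, so the armor lines of a PGP-signed file are dropped, but it does not verify signatures. One further assumption is that a file without any Canonical field passes the check, because there is nothing to compare against.

```python
def parse_security_txt(text):
    """Parse 'Field: value' lines into a dict of lists (field names lower-cased)."""
    fields = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue  # blank lines, comments, and PGP armor lines are skipped
        name, value = line.split(":", 1)  # split once: values may contain colons
        fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


def canonical_matches(fields, retrieved_from):
    """True if no Canonical field is present, or one of them lists the retrieval URI."""
    canonical = fields.get("canonical", [])
    return not canonical or retrieved_from in canonical
```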

Conclusion

Our analysis shows that the state of web security on the provider side is improving compared to previous years. For example, we see that the use of HTTPS has increased by almost 10% in the last 12 months. We also find an increase in the protection of cookies and the use of security headers.

These increases indicate that we are moving toward a safer web environment, but they do not mean that the web is secure enough today; there is still room for improvement. For example, we believe that the web community should value security headers more, as they are very effective mechanisms for protecting web environments and web users from possible attacks.

Bot protection mechanisms could also be adopted more widely to protect platforms from malicious bots. Furthermore, our analysis from last year and another study using the HTTP Archive dataset on the update behavior of websites showed that website components are not diligently maintained, which increases the attack surface of web environments.

We should not forget that attackers are also working diligently to develop new techniques to bypass the security mechanisms we adopt.

With our analysis, we have tried to provide an overview of the security of our web. As extensive as our investigation is, our methodology only allows us to see a subset of all aspects of modern web security. For example, we do not know what additional measures a site may employ to mitigate or prevent attacks such as Cross-Site Request Forgery (CSRF) or certain types of Cross-Site Scripting (XSS). As such, the picture portrayed in this chapter is incomplete, yet it is a solid directional signal of the state of web security today.

The takeaway from our analysis is that we, the web community, must continue to invest more interest and resources in making our web environments much safer, in the hope of a better and safer tomorrow for all.